Rules to Better Code

Do you refactor your code and keep methods short?

Refactoring is all about making code easier to understand and cheaper to modify without changing its behavior.

As a rule of thumb, no method should be greater than 50 lines of code. Long-winded methods are the bane of any developer and should be avoided at all costs. Instead, a method of 50 lines or more should be broken down into smaller functions.

Do you know when functions are too complicated?

You should generally be looking for ways to simplify your code (e.g. removing heavily-nested case statements). As a minimum, look for the most complicated method you have and check whether it needs simplifying.

In Visual Studio, there is built-in support for Cyclomatic Complexity analysis.

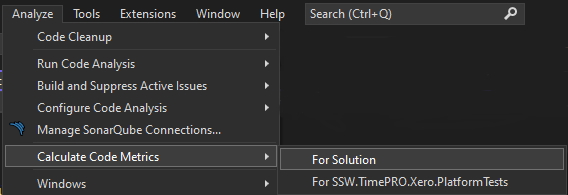

- Go to Analyze | Calculate Code Metrics | For Solution

Figure: Launching the Code Metrics tool within Visual Studio

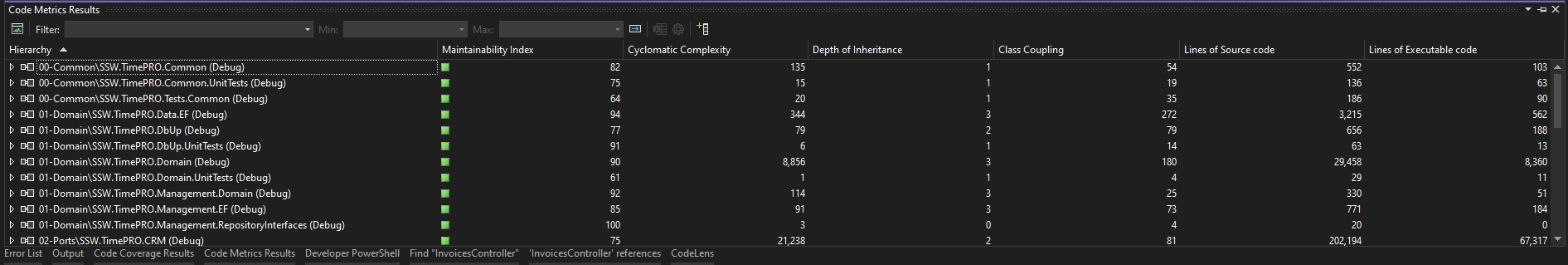

- Look at the function with the largest Cyclomatic Complexity number and consider refactoring to make it simpler.

Figure: Results from cyclomatic analysis (and other analyses) give an indication of how complicated functions are

Tip: Aim for "green" against each function's Maintainability Index.

Do you use AI pair programming?

Too often, developers are writing a line of code, and they forget that little bit of syntax they need to do what they want. In that case, they usually end up googling it to find the documentation and then copy and paste it into their code. That process is a pain because it wastes valuable dev time. They might also ask another dev for help.

Not to worry, AI pair programming is here to save the day!

Video: 6 ways GitHub Copilot helps you write better code faster (7 min)Video: Say hello to GitHub Copilot Enterprise! (17 min)New tools like GitHub Copilot provide devs with potentially complete solutions as they type. It might sound like it's too good to be true, but in reality you can do so much with these tools.

"It’s hard to believe that GitHub Copilot is actually an AI and not a Mechanical Turk. The quality of the code is at the very least comparable to my own (and in fairness that's me bragging), and it's staggering to see how accurate it is in determining your needs, even in the most obscure scenarios." - Matt Goldman

What can it do?

There is a lot to love with AI pair programming ❤️, here is just a taste of what it can do:

Help with writing code

- Populate a form

- Do complex maths

- Create DTOs

- Hydrate data

- Query APIs

- Do unit tests

GitHub Copilot - Help with reading and understanding code

- Generate Pull Request summaries

- Explore and learn about a codebase

- Understand PBIs and how to implement them

Some tools that offer this are GitHub Copilot or Codeium (not to be confused with Codium).

Why is it awesome?

AI pair programming has so much to offer, here are the key benefits:

- Efficiency - Less time doing gruntwork like repetitive tasks and making boilerplate

- Learnability - Quick suggestions in heaps of languages, such as:

- C#

- JavaScript

- SQL, and many more

✅ Figure: Good example - GitHub Copilot saves you oodles of time!

Do you look for duplicate code?

Code duplication is a big "code smell" that harms maintainability. You should keep an eye out for repeated code and make sure you refactor it into a single place.

For example, have a look at these two Action methods in an MVC 4 controller.

//// GET: /Person/[Authorize]public ActionResult Index(){// get company this user can viewCompany company = null;var currentUser = Session["CurrentUser"] as User;if (currentUser != null){company = currentUser.Company;}// show people in that companyif (company != null){var people = db.People.Where(p => p.Company == company);return View(people);}else{return View(new List());}}//// GET: /Person/Details/5[Authorize]public ActionResult Details(int id = 0){// get company this user can viewCompany company = null;var currentUser = Session["CurrentUser"] as User;if (currentUser != null){company = currentUser.Company;}// get matching personPerson person = db.People.Find(id);if (person == null || person.Company == company){return HttpNotFound();}return View(person);}❌ Figure: Figure: Bad Example - The highlighted code is repeated and represents a potential maintenance issue.

We can refactor this code to make sure the repeated lines are only in one place.

private Company GetCurrentUserCompany(){// get company this user can viewCompany company = null;var currentUser = Session["CurrentUser"] as User;if (currentUser != null){company = currentUser.Company;}return company;}//// GET: /Person/[Authorize]public ActionResult Index(){// get company this user can viewCompany company = GetCurrentUserCompany();// show people in that companyif (company != null){var people = db.People.Where(p => p.Company == company);return View(people);}else{return View(new List());}}// GET: /Person/Details/5[Authorize]public ActionResult Details(int id = 0){// get company this user can viewCompany company = GetCurrentUserCompany();// get matching personPerson person = db.People.Find(id);if (person == null || person.Company == company){return HttpNotFound();}return View(person);}✅ Figure: Figure: Good Example - The repeated code has been refactored into its own method.

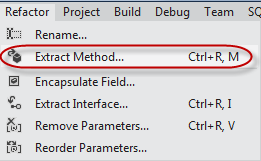

Tip: The Refactor menu in Visual Studio 11 can do this refactoring for you.

Figure: The Extract Method function in Visual Studio's Refactor menu

Do you maintain separation of concerns?

One of the major issues people had back in the day with ASP (before ASP.NET) was the prevalence of "Spaghetti Code". This mixed Reponse.Write() with actual code.

Ideally, you should keep design and code separate - otherwise, it will be difficult to maintain your application. Try to move all data access and business logic code into separate modules.

Bob Martin explains this best:

Do you follow naming conventions?

It's the most obvious - but naming conventions are so crucial to simpler code, it's crazy that people are so loose with them...

For Javascript / Typescript

Google publishes a JavaScript style guide. For more guides, please refer to this link: Google JavaScript Style Guide

Here are some key points:

- Use const or let – Not var

- Use semicolons

- Use arrow functions

- Use template strings

- Use uppercase constants

- Use single quotes

See 13 Noteworthy Points from Google’s JavaScript Style Guide

For C# Java

See chapter 2: Meaningful Names Clean Code: A Handbook of Agile Software Craftsmanship

For SQL (see Rules to Better SQL Databases)

Do you use the testing stage, in the file name?

When moving through the different stages of testing i.e. from internal testing, through to UAT, you should suffix the application name with the appropriate stage:

Stage Testing Description Naming Convention Alpha Developer testing with project team Northwind_v2-3_alpha.exe Beta Internal “Test Please" testing with non-project working colleagues Northwind_v2-3_beta.exe Production e.g. When moving onto production, this naming convention is dropped Northwind_v2-3.exe Do you avoid using spaces in folder and file names?

It is not a good idea to have spaces in a folder or file name as they don't translate to URLs very well and can even cause technical problems.

Instead of using spaces, we recommend:

- kebab-case - using dashes between words

Other not recommended options include:

- CamelCase - using the first letter of each word in uppercase and the rest of the word in lowercase

- snake_case - using underscores between words

For further information, read Do you know how to name documents?

This rule should apply to any file or folder that is on the web. This includes Azure DevOps Team Project names and SharePoint Pages.

- extremeemailsversion1.2.doc

- Extreme Emails version 1.2.doc

❌ Figure: Bad examples - File names have spaces or dots

- extreme-emails-v1-2.doc

- Extreme-Emails-v1-2.doc

✅ Figure: Good examples - File names have dashes instead of spaces

- sharepoint.ssw.com.au/Training/UTSNET/Pages/UTS%20NET%20Short%20Course.aspx

- fileserver/Shared%20Documents/Ignite%20Brisbane%20Talk.docx

❌ Figure: Bad examples - File names have been published to the web with spaces so the URLs look ugly and are hard to read

- sharepoint.ssw.com.au/Training/UTS-NET/Pages/UTS-NET-Short-Course.aspx

- fileserver/Shared-Documents/Ignite-Brisbane-Talk.docx"

✅ Figure: Good examples - File names have no spaces so are much easier to read

Do you start versioning at 0.1 and change to 1.0 once approved by a client or tester?

Software and document version numbers should be consistent and meaningful to both the developer and the user.

Generally, version numbering should begin at 0.1. Once the project has been approved for release by the client or tester, the version number will be incremented to 1.0. The numbering after the decimal point needs to be decided on and uniform. For example, 1.1 might have many bug fixes and a minor new feature, while 1.11 might only include one minor bug fix.

Do you know how to avoid problems in if-statements?

Try to avoid problems in if-statements without curly brackets and just one statement which is written one line below the if-statement. Use just one line for such if-statements. If you want to add more statements later on and you could forget to add the curly brackets which may cause problems later on.

if (ProductName == null) ProductName = string.Empty; if (ProductVersion == null)ProductVersion = string.Empty; if (StackTrace == null) StackTrace = string.Empty;❌ Figure: Figure: Bad Example

if (ProductName == null){ProductName = string.Empty;}if (ProductVersion == null){ProductVersion = string.Empty;}if (StackTrace == null){StackTrace = string.Empty;}✅ Figure: Figure: Good Example

Do you avoid Double-Negative Conditionals in if-statements?

Try to avoid Double-Negative Conditionals in if-statements. Double negative conditionals are difficult to read because developers have to evaluate which is the positive state of two negatives. So always try to make a single positive when you write if-statement.

if (!IsValid){// handle error}else{// handle success}❌ Figure: Figure: Bad example

if (IsValid){// handle success}else{// handle error}✅ Figure: Figure: Good example

if (!IsValid){// handle error}✅ Figure: Figure: Another good example

Use pattern matching for boolean evaluations to make your code even more readable!

if (IsValid is false){// handle error}✅ Figure: Figure: Even better

C# Code - Do you use string literals?

<introEmbed body={<> Do you know String should be @-quoted instead of using escape character for "\\"? The @ symbol specifies that escape characters and line breaks should be ignored when the string is created. As per: [Strings](<https://docs.microsoft.com/en-us/previous-versions/visualstudio/visual-studio-2008/c84eby0h(v=vs.90?WT.mc_id=DT-MVP-33518)?redirectedfrom=MSDN>) </>} /> ```cs string p2 = "\\My Documents\\My Files\\"; ``` <figureEmbed figureEmbed={{ preset: "badExample", figure: 'Figure: Bad example - Using "\\"', shouldDisplay: true } } /> ```cs string p2 = @"\My Documents\My Files\"; ``` <figureEmbed figureEmbed={{ preset: "goodExample", figure: 'Figure: Good example - Using @', shouldDisplay: true } } /> ## Raw String Literals In C#11 and later, we also have the option to use raw string literals. These are great for embedding blocks of code from another language into C# (e.g. SQL, HTML, XML, etc.). They are also useful for embedding strings that contain a lot of escape characters (e.g. regular expressions). Another advantage of Raw String Literals is that the redundant whitespace is trimmed from the start and end of each line, so you can indent the string to match the surrounding code without affecting the string itself. ```cs var bad = "<html>" + "<body>" + "<p class=\"para\">Hello, World!</p>" + "</body>" + "</html>"; ``` <figureEmbed figureEmbed={{ preset: "badExample", figure: 'Figure: Bad example - Single quotes', shouldDisplay: true } } /> ```cs var good = """ <html> <body> <p class="para">Hello, World!</p> </body> </html> """; ``` <figureEmbed figureEmbed={{ preset: "goodExample", figure: 'Figure: Good example - Using raw string literals', shouldDisplay: true } } /> For more information on Raw String literals see [learn.microsoft.com/en-us/dotnet/csharp/language-reference/tokens/raw-string](https://learn.microsoft.com/en-us/dotnet/csharp/language-reference/tokens/raw-string?WT.mc_id=DT-MVP-33518)Do you add the Application Name in the SQL Server connection string?

You should always add the application name to the connection string so that SQL Server will know which application is connecting, and which database is used by that application. This will also allow SQL Profiler to trace individual applications which helps you monitor performance or resolve conflicts.

<add key="Connection" value="Integrated Security=SSPI;Persist Security Info=False;Initial Catalog=Biotrack01;Data Source=sheep;"/>❌ Figure: Bad example - The connection string without Application Name

<add key="Connection" value="Integrated Security=SSPI;Persist SecurityInfo=False;Initial Catalog=Biotrack01;Data Source=sheep;Application Name=Biotracker"/> <!-- Good Code - Application Name is added in the connection string. -->✅ Figure: Good example - The connection string with Application Name

Do you know how to use Connection Strings?

There are 2 type of connection strings. The first contains only address type information without authorization secrets. These can use all of the simpler methods of storing configuration as none of this data is secret.

Option 1 - Using Azure Managed Identities (Recommended)

When deploying an Azure hosted application we can use Azure Managed Identities to avoid having to include a password or key inside our connection string. This means we really just need to keep the address or url to the service in our application configuration. Because our application has a Managed Identity, this can be treated in the same way as a user's Azure AD identity and specific roles can be assigned to grant the application access to required services.

This is the preferred method wherever possible, because it eliminates the need for any secrets to be stored. The other advantage is that for many services the level of access control available using Managed Identities is much more granular making it much easier to follow the Principle of Least Privilege.

Option 2 - Connection Strings with passwords or keys

If you have to use some sort of secret or key to login to the service being referenced, then some thought needs to be given to how those secrets can be secured. Take a look at Do you store your secrets securely to learn how to keep your secrets secure.

Example - Integrating Azure Key Vault into your ASP.NET Core application

In .NET 5 we can use Azure Key Vault to securely store our connection strings away from prying eyes.

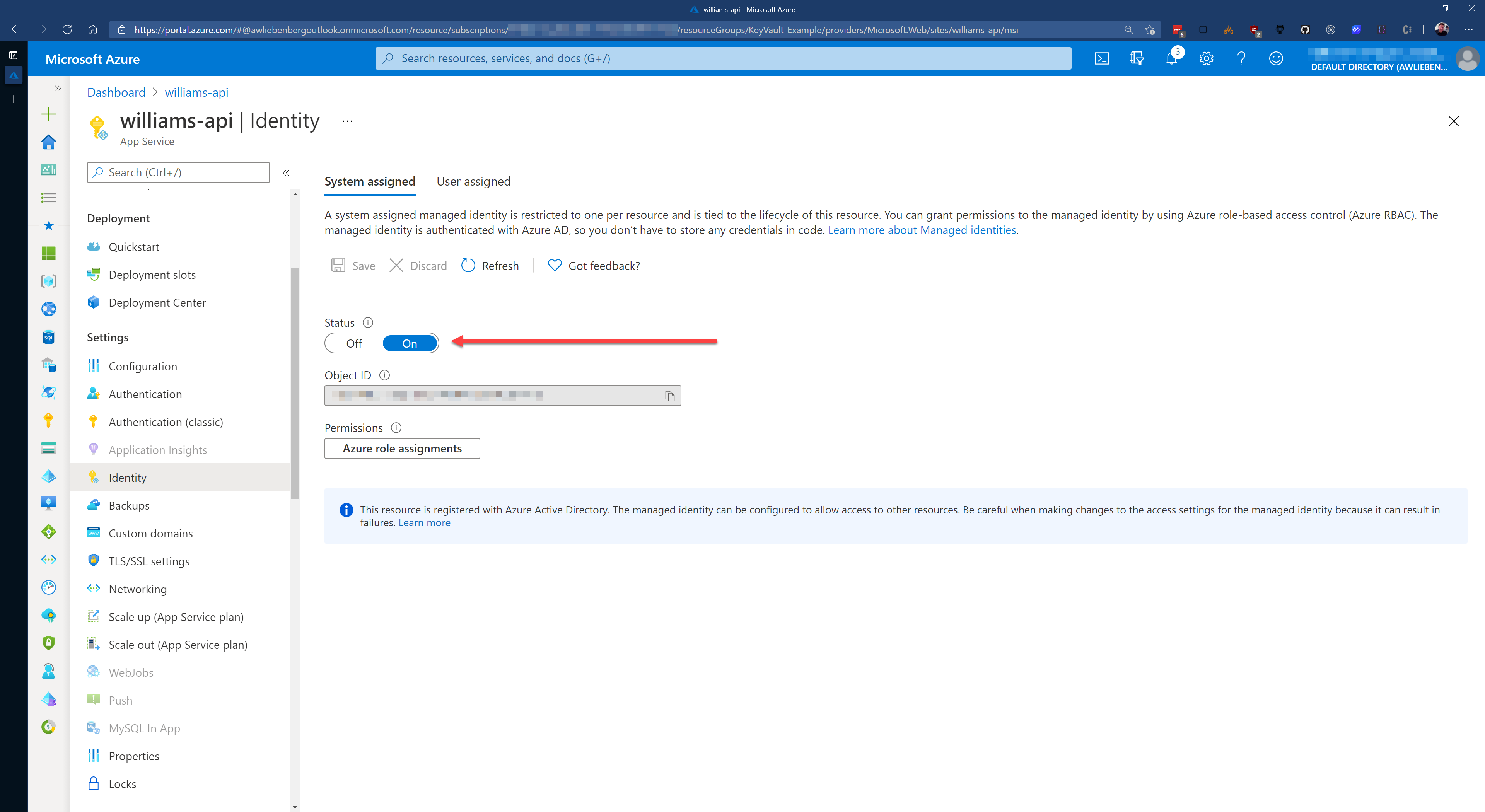

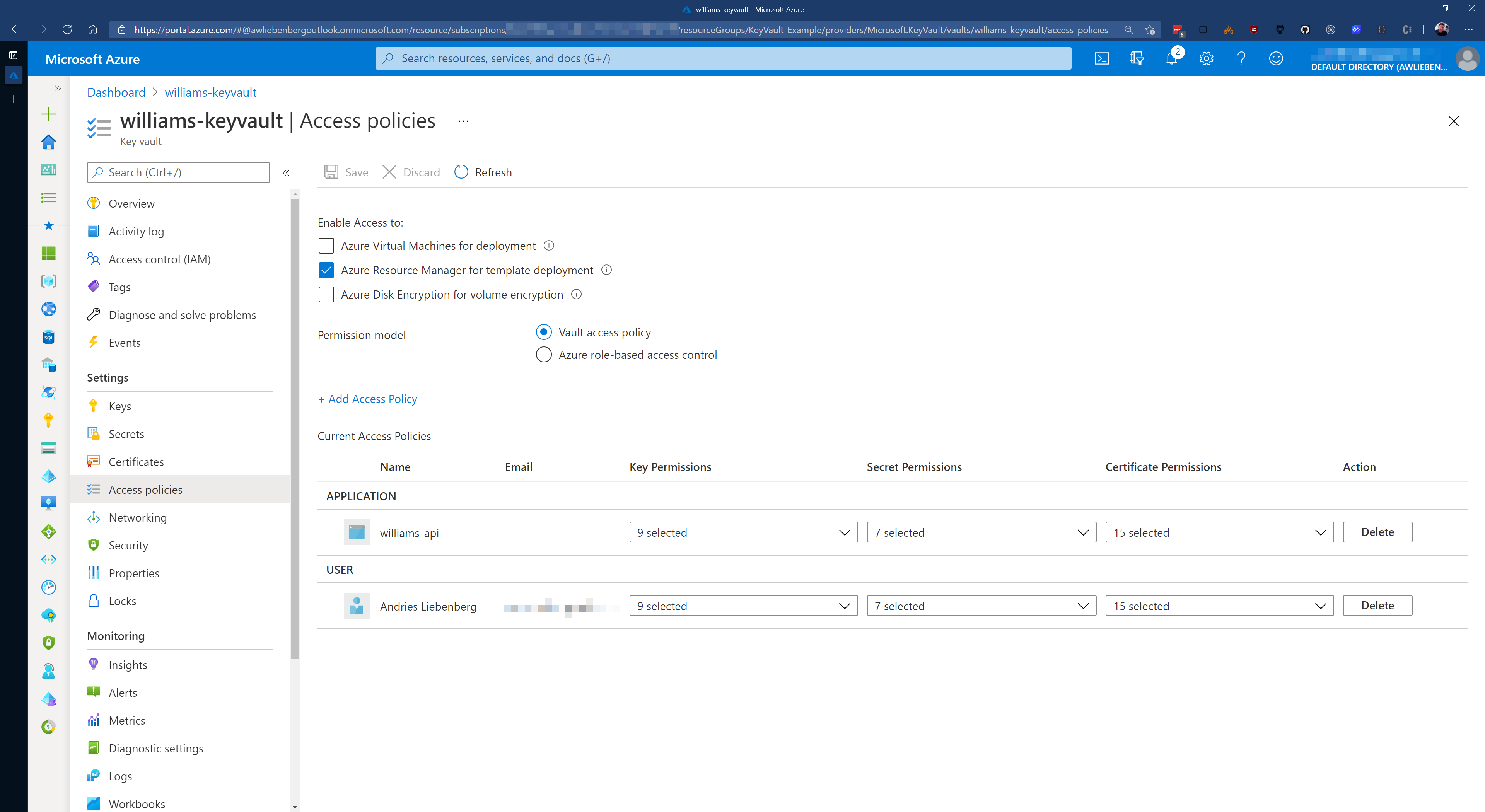

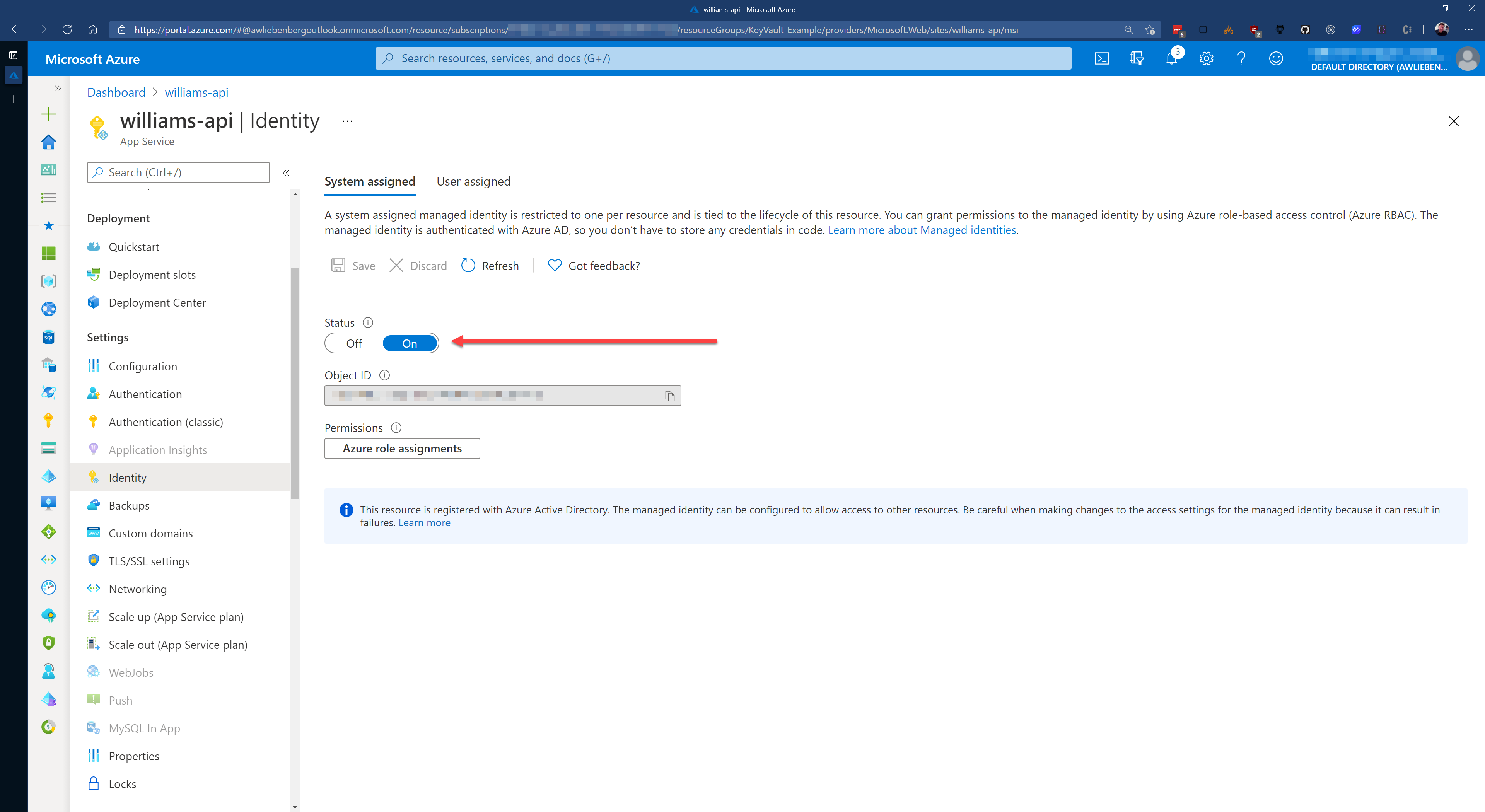

Azure Key Vault is great for keeping your secrets secret because you can control access to the vault via Access Policies. The access policies allows you to add Users and Applications with customized permissions. Make sure you enable the System assigned identity for your App Service, this is required for adding it to Key Vault via Access Policies.

You can integrate Key Vault directly into your ASP.NET Core application configuration. This allows you to access Key Vault secrets via

IConfiguration.public static IHostBuilder CreateHostBuilder(string[] args) =>Host.CreateDefaultBuilder(args).ConfigureWebHostDefaults(webBuilder =>{webBuilder.UseStartup<Startup>().ConfigureAppConfiguration((context, config) =>{// To run the "Production" app locally, modify your launchSettings.json file// -> set ASPNETCORE_ENVIRONMENT value as "Production"if (context.HostingEnvironment.IsProduction()){IConfigurationRoot builtConfig = config.Build();// ATTENTION://// If running the app from your local dev machine (not in Azure AppService),// -> use the AzureCliCredential provider.// -> This means you have to log in locally via `az login` before running the app on your local machine.//// If running the app from Azure AppService// -> use the DefaultAzureCredential provider//TokenCredential cred = context.HostingEnvironment.IsAzureAppService() ?new DefaultAzureCredential(false) : new AzureCliCredential();var keyvaultUri = new Uri($"https://{builtConfig["KeyVaultName"]}.vault.azure.net/");var secretClient = new SecretClient(keyvaultUri, cred);config.AddAzureKeyVault(secretClient, new KeyVaultSecretManager());}});});✅ Figure: Good example - For a complete example, refer to this [sample application](https://github.com/william-liebenberg/keyvault-example)

Tip: You can detect if your application is running on your local machine or on an Azure AppService by looking for the

WEBSITE_SITE_NAMEenvironment variable. If null or empty, then you are NOT running on an Azure AppService.public static class IWebHostEnvironmentExtensions{public static bool IsAzureAppService(this IWebHostEnvironment env){var websiteName = Environment.GetEnvironmentVariable("WEBSITE_SITE_NAME");return string.IsNullOrEmpty(websiteName) is not true;}}Setting up your Key Vault correctly

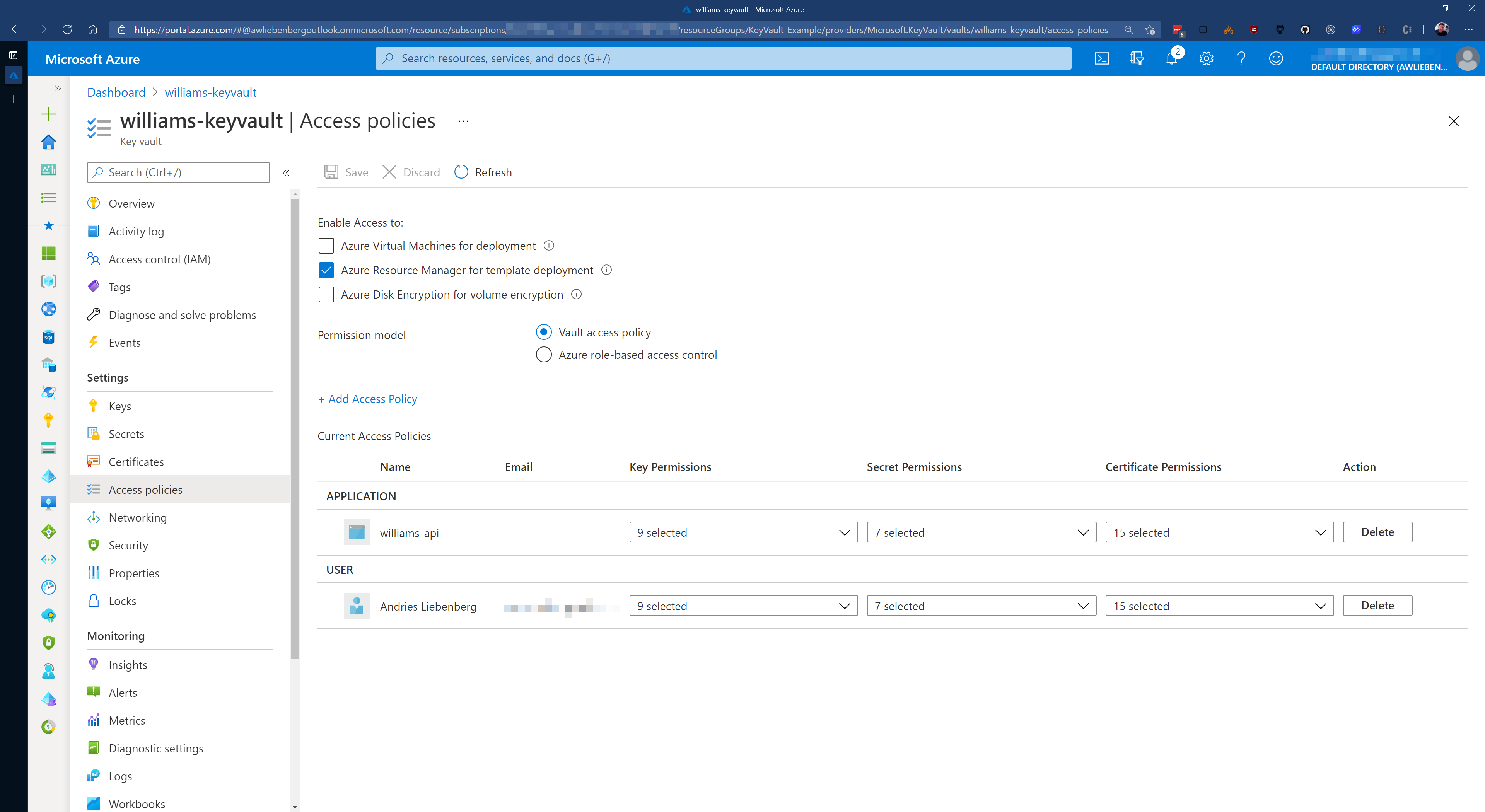

In order to access the secrets in Key Vault, you (as User) or an Application must have been granted permission via a Key Vault Access Policy.

Applications require at least the LIST and GET permissions, otherwise the Key Vault integration will fail to retrieve secrets.

Figure: Key Vault Access Policies - Setting permissions for Applications and/or Users

Azure Key Vault and App Services can easily trust each other by making use of System assigned Managed Identities. Azure takes care of all the complicated logic behind the scenes for these two services to communicate with each other - reducing the complexity for application developers.

So, make sure that your Azure App Service has the System assigned identity enabled.

Once enabled, you can create a Key Vault Access policy to give your App Service permission to retrieve secrets from the Key Vault.

Figure: Enabling the System assigned identity for your App Service - this is required for adding it to Key Vault via Access Policies

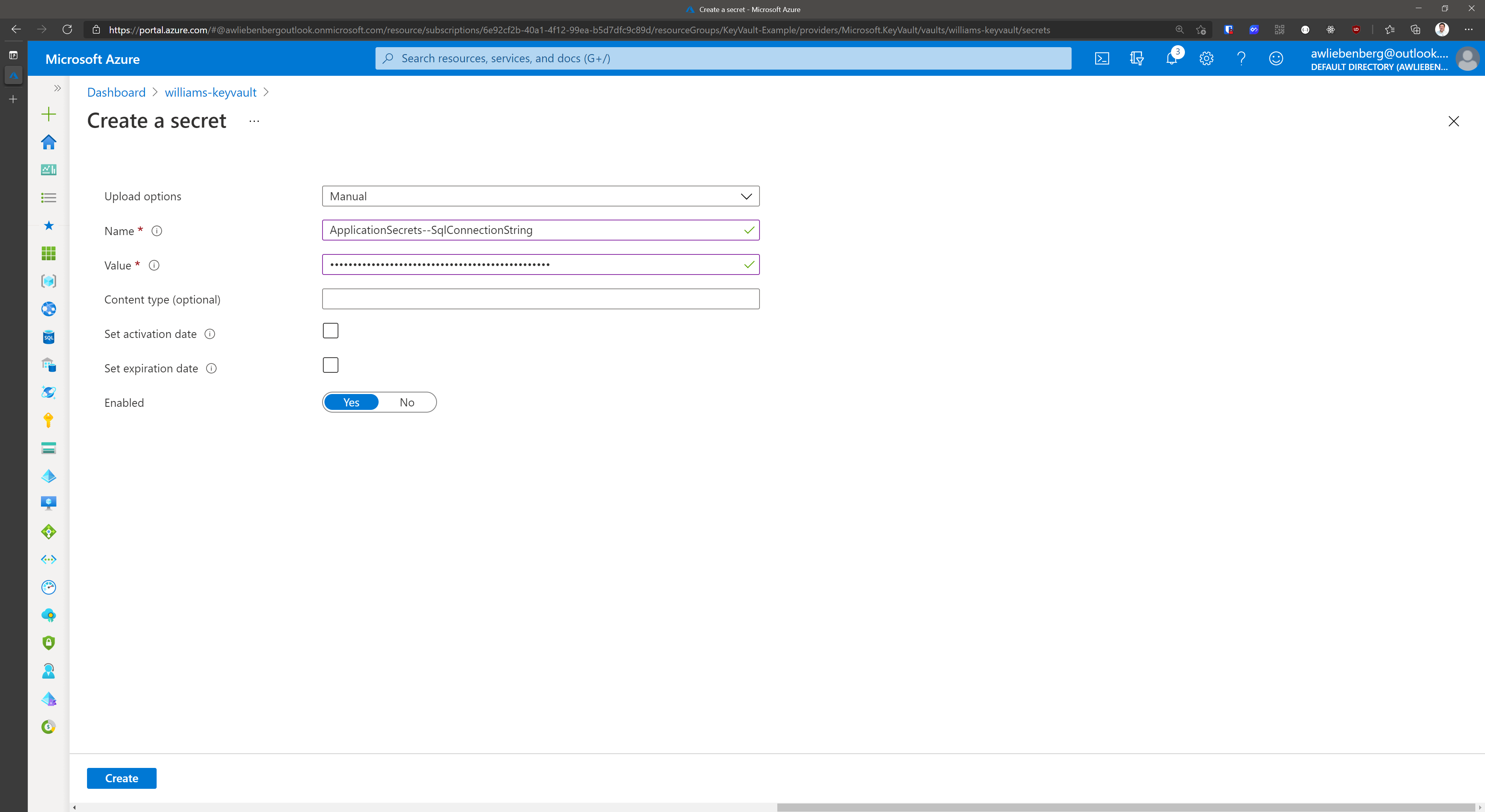

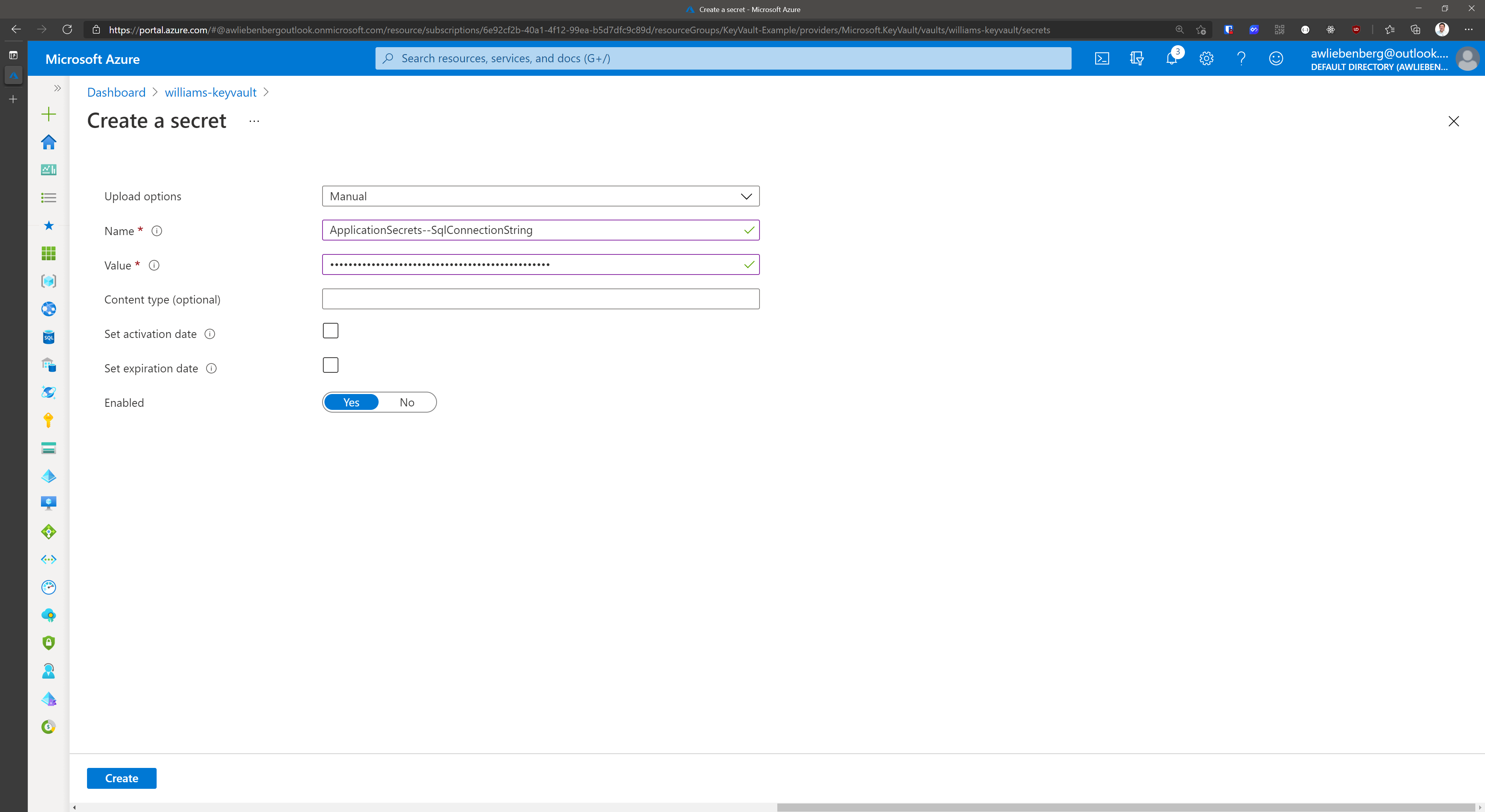

Adding secrets into Key Vault is easy.

- Create a new secret by clicking on the Generate/Import button

- Provide the name

- Provide the secret value

- Click Create

Figure: Creating the SqlConnectionString secret in Key Vault.

Figure: SqlConnectionString stored in Key Vault

Note: The ApplicationSecrets section is indicated by "ApplicationSecrets--" instead of "ApplicationSecrets:".

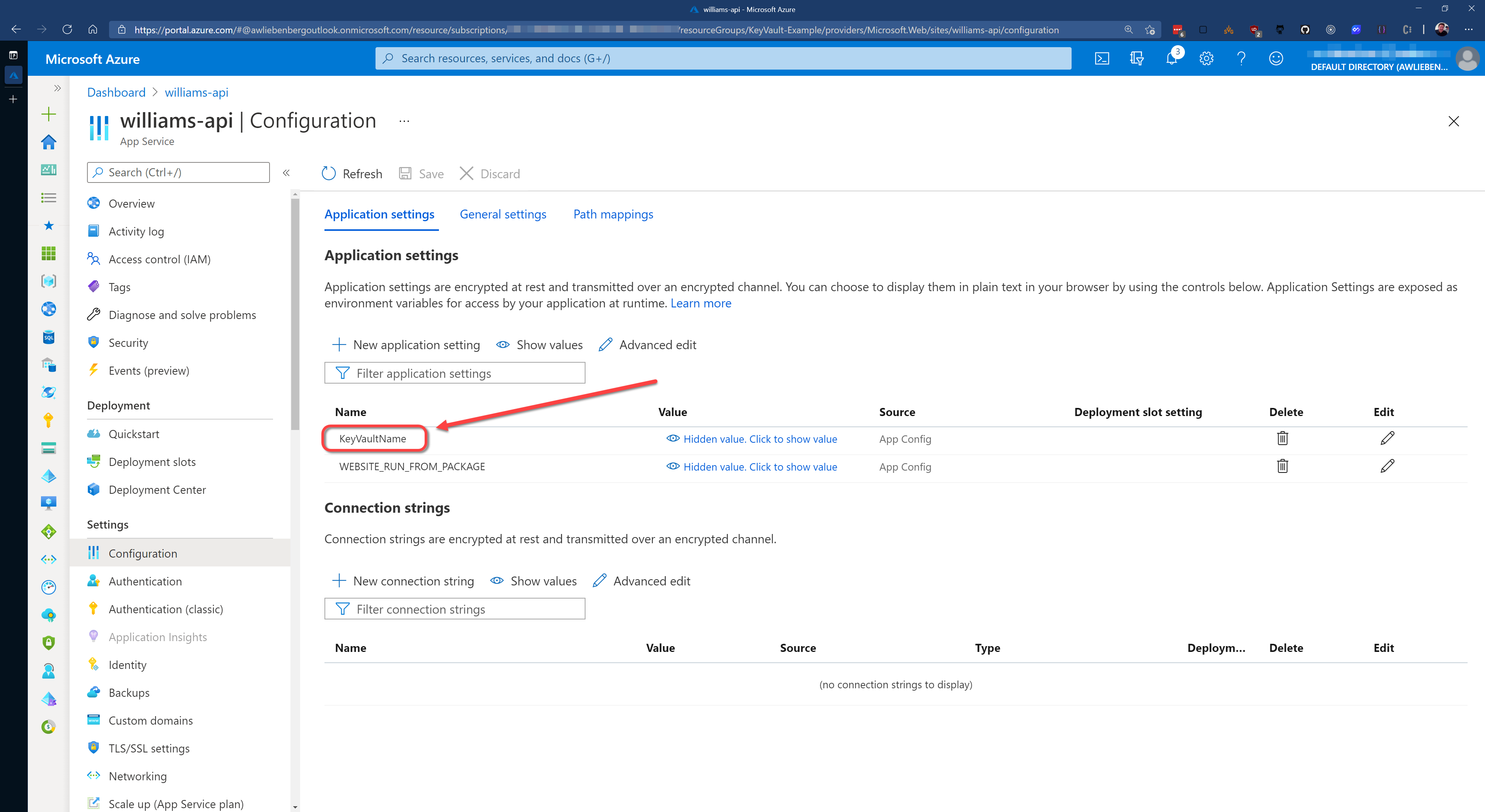

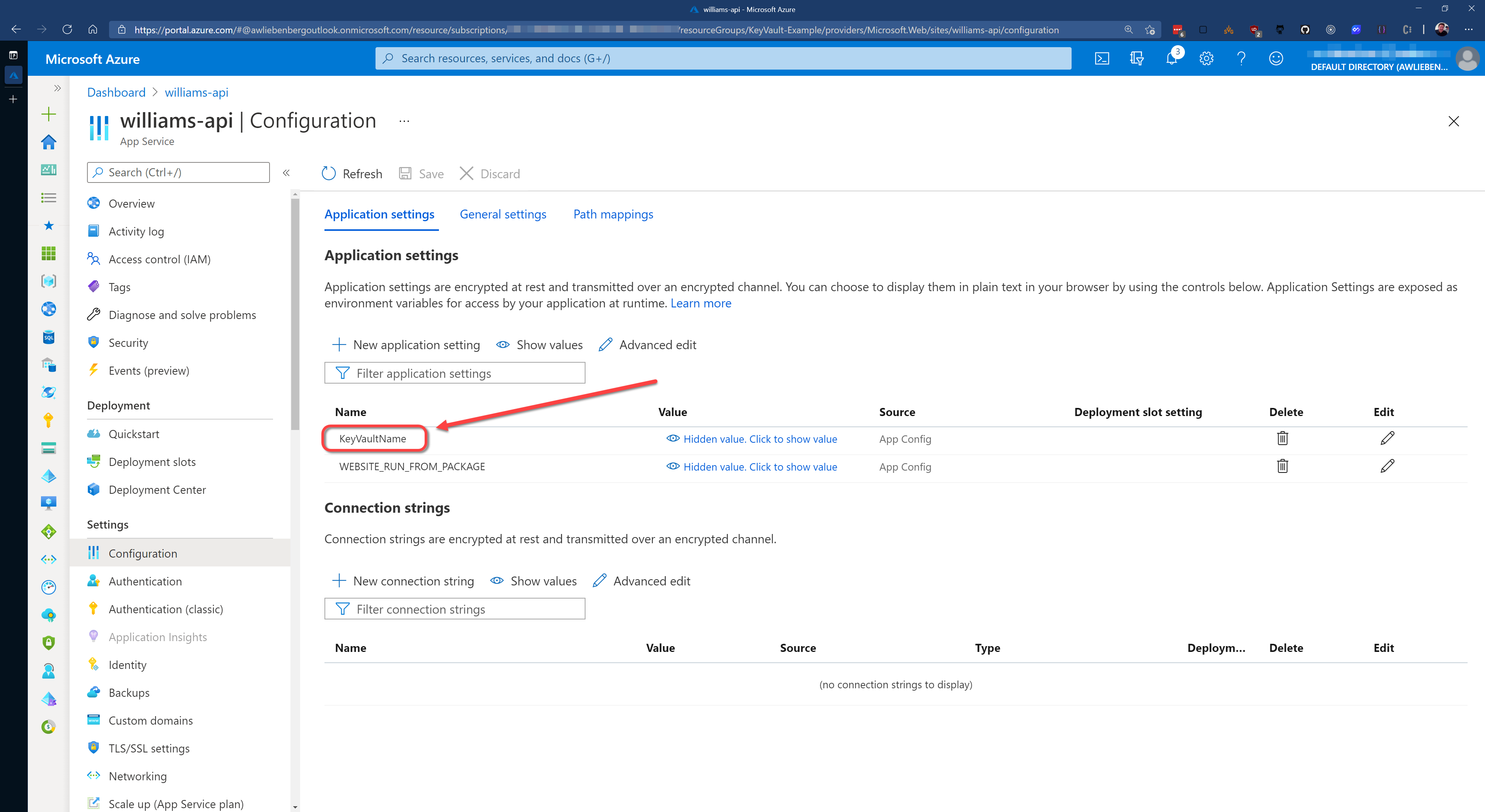

As a result of storing secrets in Key Vault, your Azure App Service configuration (app settings) will be nice and clean. You should not see any fields that contain passwords or keys. Only basic configuration values.

Figure: Your WebApp Configuration - No passwords or secrets, just a name of the Key vault that it needs to access

Video: Watch SSW's William Liebenberg explain Connection Strings and Key Vault in more detail (8 min)History of Connection Strings

In .NET 1.1 we used to store our connection string in a configuration file like this:

<configuration><appSettings><add key="ConnectionString" value ="integrated security=true;data source=(local);initial catalog=Northwind"/></appSettings></configuration>...and access this connection string in code like this:

SqlConnection sqlConn =new SqlConnection(System.Configuration.ConfigurationSettings.AppSettings["ConnectionString"]);❌ Figure: Historical example - Old ASP.NET 1.1 way, untyped and prone to error

In .NET 2.0 we used strongly typed settings classes:

Step 1: Setup your settings in your common project. E.g. Northwind.Common

Figure: Settings in Project Properties

Step 2: Open up the generated App.config under your common project. E.g. Northwind.Common/App.config

Step 3:

Copy the content into your entry applications app.config. E.g. Northwind.WindowsUI/App.configThe new setting has been updated to app.config automatically in .NET 2.0<configuration><connectionStrings><add name="Common.Properties.Settings.NorthwindConnectionString"connectionString="Data Source=(local);Initial Catalog=Northwind;Integrated Security=True"providerName="System.Data.SqlClient" /></connectionStrings></configuration>...then you can access the connection string like this in C#:

SqlConnection sqlConn =new SqlConnection(Common.Properties.Settings.Default.NorthwindConnectionString);❌ Figure: Historical example - Access our connection string by strongly typed generated settings class...this is no longer the best way to do it

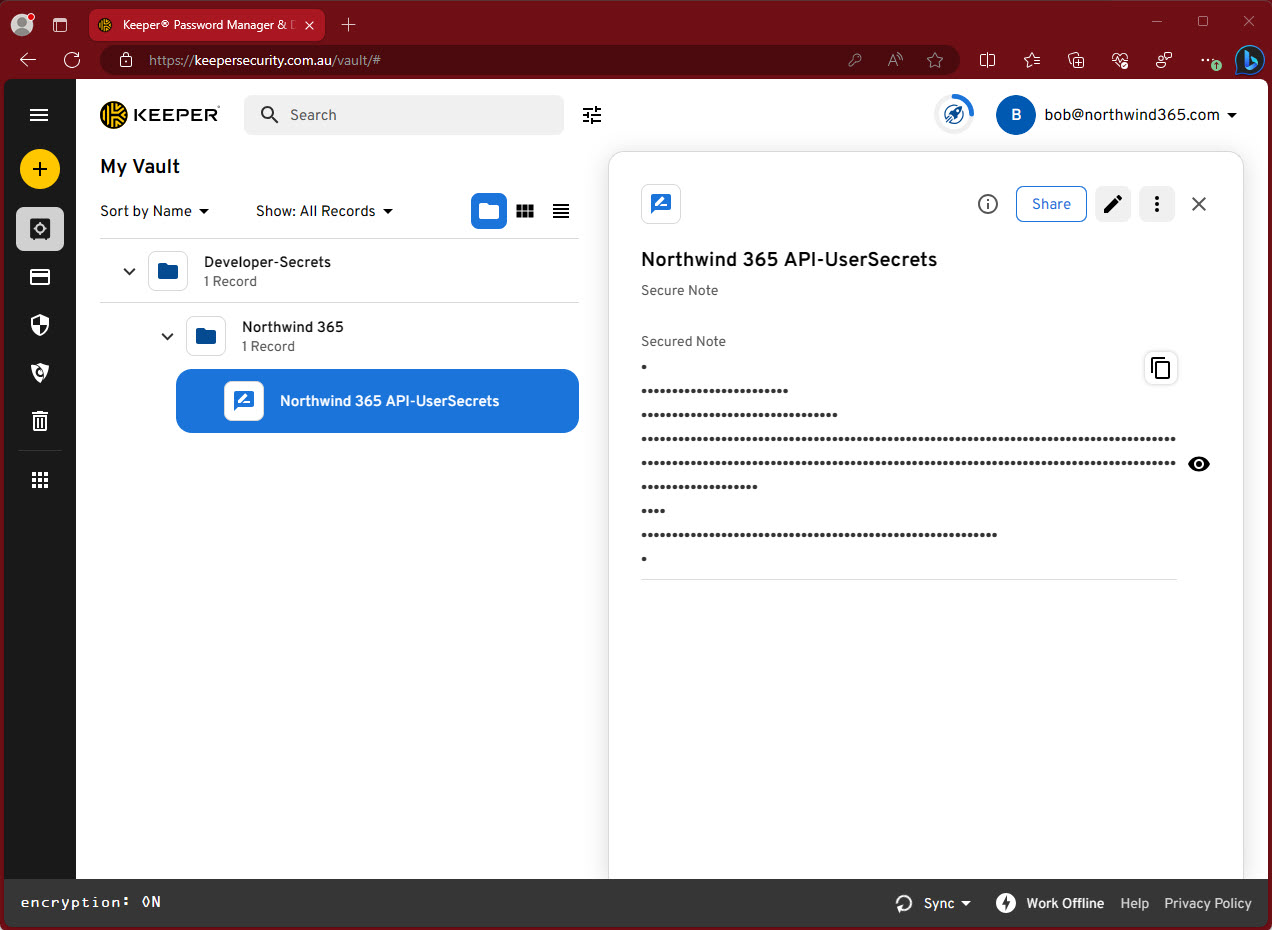

Do you store your secrets securely?

<introEmbed body={<> Most systems will have variables that need to be stored securely; OpenId shared secret keys, connection strings, and API tokens to name a few. These secrets **must not** be stored in source control. It is insecure and means they are sitting out in the open, wherever code has been downloaded, for anyone to see. </>} /> There are many options for managing secrets in a secure way: ### Bad Practices <asideEmbed variant="greybox" body={<> #### Store production passwords in source control Pros: * Minimal change to existing process * Simple and easy to understand Cons: * Passwords are readable by anyone who has either source code or access to source control * Difficult to manage production and non-production config settings * Developers can read and access the production password  </>} figureEmbed={{ preset: "badExample", figure: 'Bad practice - Overall rating: 1/10', shouldDisplay: true }} /> <asideEmbed variant="greybox" body={<> #### Store production passwords in source control protected with the [ASP.NET IIS Registration Tool](https://docs.microsoft.com/en-us/previous-versions/zhhddkxy(v=vs.140?WT.mc_id=DT-MVP-33518)?redirectedfrom=MSDN) Pros: * Minimal change to existing process – no need for [DPAPI](https://docs.microsoft.com/en-us/aspnet/core/security/data-protection/introduction?view=aspnetcore-5.0&WT.mc_id=ES-MVP-33518) or a dedicated Release Management (RM) tool * Simple and easy to understand Cons: * Need to manually give the app pool identity ability to read the default RSA key container * Difficult to manage production and non-production config settings * Developers can easily decrypt and access the production password * Manual transmission of the password from the key store to the encrypted config file </>} figureEmbed={{ preset: "badExample", figure: 'Bad practice - Overall rating: 2/10', shouldDisplay: true }} /> <asideEmbed variant="greybox" body={<> #### Use Windows Identity instead of username / password Pros: * Minimal change to existing process – no need for DPAPI or a dedicated RM tool * Simple and easy to understand Cons: * Difficult to manage production and non-production config settings * Not generally applicable to all secured resources * Can hit firewall snags with Kerberos and AD ports * Vulnerable to DOS attacks related to password lockout policies * Has key-person reliance on network admin </>} figureEmbed={{ preset: "badExample", figure: 'Bad practice - Overall rating: 4/10', shouldDisplay: true }} /> <asideEmbed variant="greybox" body={<> #### [Use External Configuration Files](https://docs.microsoft.com/en-us/aspnet/identity/overview/features-api/best-practices-for-deploying-passwords-and-other-sensitive-data-to-aspnet-and-azure?WT.mc_id=DT-MVP-33518) Pros: * Simple to understand and implement Cons: * Makes setting up projects the first time very hard * Easy to accidentally check the external config file into source control * Still need DPAPI to protect the external config file * No clear way to manage the DevOps process for external config files </>} figureEmbed={{ preset: "default", figure: 'XXX', shouldDisplay: false }} /> <figureEmbed figureEmbed={{ preset: "badExample", figure: 'Figure: Bad practice - Overall rating: 1/10', shouldDisplay: true } } /> ### Good Practices <asideEmbed variant="greybox" body={<> #### Use Octopus/ VSTS RM secret management, with passwords sourced from KeePass Pros: * Scalable and secure * General industry best practice - great for organizations of most sizes below large corporate Cons: * Password reset process is still manual * DPAPI still needed </>} figureEmbed={{ preset: "goodExample", figure: 'Good practice - Overall rating: 8/10', shouldDisplay: true }} /> <asideEmbed variant="greybox" body={<> #### Use Enterprise Secret Management Tool – Keeper, 1Password, LastPass, Hashicorp Vault, etc Pros: * Enterprise grade – supports cryptographically strong passwords, auditing of secret access and dynamic secrets * Supports hierarchy of secrets * API interface for interfacing with other tools * Password transmission can be done without a human in the chain Cons: * More complex to install and administer * DPAPI still needed for config files at rest </>} figureEmbed={{ preset: "goodExample", figure: 'Good practice - Overall rating: 8/10', shouldDisplay: true }} /> <asideEmbed variant="greybox" body={<> #### Use .NET User Secrets Pros: * Simple secret management for development environments * Keeps secrets out of version control Cons: * Not suitable for production environments </>} figureEmbed={{ preset: "goodExample", figure: 'Good practice - Overall rating 8/10', shouldDisplay: true }} /> <asideEmbed variant="greybox" body={<> #### Use Azure Key Vault See the [SSW Rewards](https://www.ssw.com.au/ssw/Rewards/) mobile app repository for how SSW is using this in a production application: <https://github.com/SSWConsulting/SSW.Rewards> Pros: * Enterprise grade * Uses industry standard best encryption * Dynamically cycles secrets * Access granted based on Azure AD permissions - no need to 'securely' share passwords with colleagues * Can be used to inject secrets in your CI/CD pipelines for non-cloud solutions * Can be used by on-premise applications (more configuration - see [Use Application ID and X.509 certificate for non-Azure-hosted apps](https://learn.microsoft.com/en-us/aspnet/core/security/key-vault-configuration?view=aspnetcore-7.0#use-application-id-and-x509-certificate-for-non-azure-hosted-apps&WT.mc_id=ES-MVP-33518)) Cons: * Tightly integrated into Azure so if you are running on another provider or on premises, this may be a concern. Authentication into Key Vault now needs to be secured. </>} figureEmbed={{ preset: "goodExample", figure: 'Good practice - Overall rating 9/10', shouldDisplay: true }} /> <asideEmbed variant="greybox" body={<> #### Avoid using secrets with Azure Managed Identities The easiest way to manage secrets is not to have them in the first place. Azure Managed Identities allows you to assign an Azure AD identity to your application and then allow it to use its identity to log in to other services. This avoids the need for any secrets to be stored. Pros: * Best solution for cloud (Azure) solutions * Enterprise grade * Access granted based on Azure AD permissions - no need to 'securely' share passwords with colleagues * Roles can be granted to your application your CI/CD pipelines at the time your services are deployed Cons: * Only works where Azure AD RBAC is available. NB. There are still some Azure services that don't yet support this. Most do though.  </>} figureEmbed={{ preset: "goodExample", figure: 'Good practice - Overall rating 10/10', shouldDisplay: true }} /> ### Resources The following resources show some concrete examples on how to apply the principles described: * [github.com/brydeno/bicepsofsteel](https://github.com/brydeno/bicepsofsteel) * [Microsoft Learn | Best practices for using Azure Key Vault](https://learn.microsoft.com/en-us/azure/key-vault/general/best-practices?WT.mc_id=DT-MVP-33518) * [Microsoft Learn | Azure Key Vault security](https://learn.microsoft.com/en-us/azure/key-vault/general/security-features?WT.mc_id=DT-MVP-33518) * [Microsoft Learn | Safe storage of app secrets in development in ASP.NET Core](https://learn.microsoft.com/en-us/aspnet/core/security/app-secrets?view=aspnetcore-5.0&tabs=windows&WT.mc_id=ES-MVP-33518) * [Microsoft Learn | Connection strings and configuration files](https://learn.microsoft.com/en-us/sql/connect/ado-net/connection-strings-and-configuration-files?view=sql-server-ver15&WT.mc_id=DT-MVP-33518) * [Microsoft Learn | Use managed identities to access App Configuration](https://learn.microsoft.com/en-us/azure/azure-app-configuration/howto-integrate-azure-managed-service-identity?tabs=core5x&pivots=framework-dotnet&WT.mc_id=AZ-MVP-33518) * [Stop storing your secrets with Azure Managed Identities | Bryden Oliver](https://www.youtube.com/watch?v=F9H0txgz0ns)Do you share your developer secrets securely?

You may be asking what's a secret for a development environment? A developer secret is any value that would be considered sensitive.

Most systems will have variables that need to be stored securely; OpenId shared secret keys, connection strings, and API tokens to name a few. These secrets must not be stored in source control. It's not secure and means they are sitting out in the open, wherever code has been downloaded, for anyone to see.

There are different ways to store your secrets securely. When you use .NET User Secrets, you can store your secrets in a JSON file on your local machine. This is great for development, but how do you share those secrets securely with other developers in your organization?

Video: Do you share secrets securely | Jeoffrey Fischer (7min)An encryption key or SQL connection string to a developer's local machine/container is a good example of something that will not always be sensitive for in a development environment, whereas a GitHub PAT token or Azure Storage SAS token would be considered sensitive as it allows access to company-owned resources outside of the local development machine.

❌ Bad practices

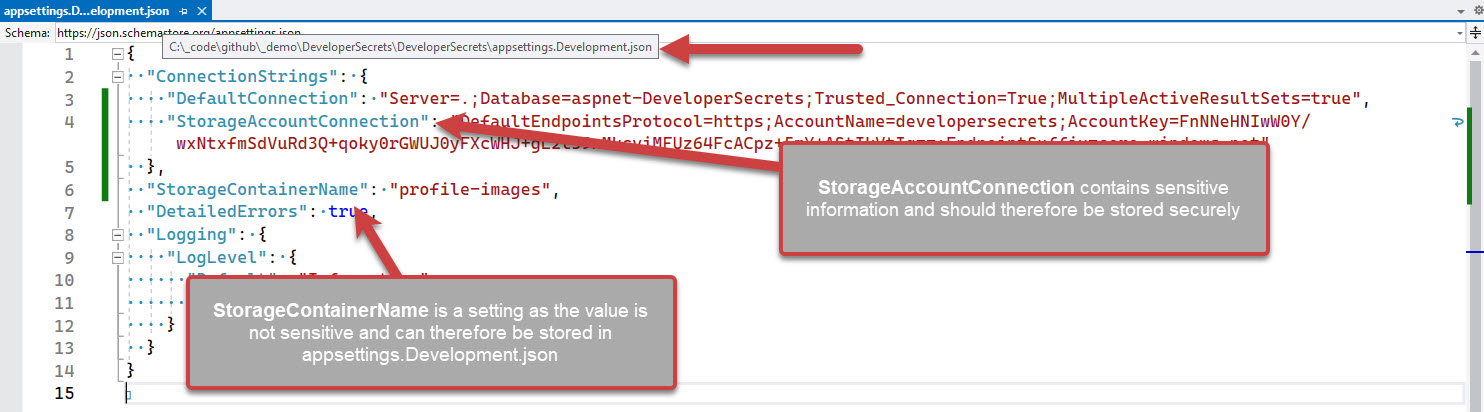

❌ Do not store secrets in appsettings.Development.json

The

appsettings.Development.jsonfile is meant for storing development settings. It is not meant for storing secrets. This is a bad practice because it means that the secrets are stored in source control, which is not secure.

❌ Figure: Bad practice - Overall rating: 1/10

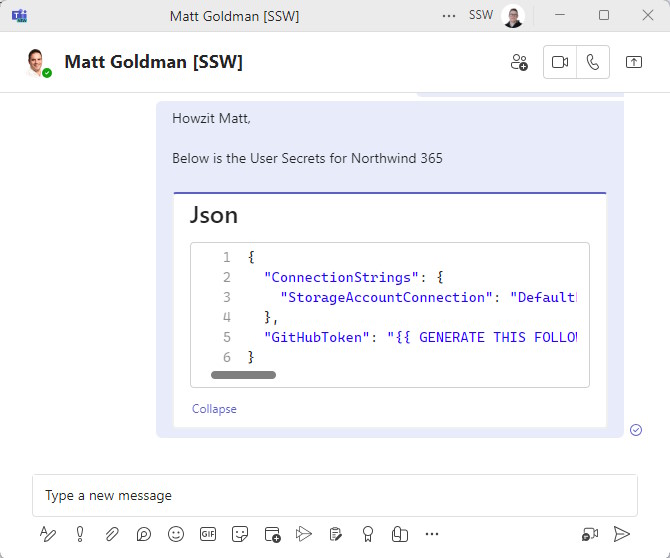

❌ Do not share secrets via email/Microsoft Teams

Sending secrets over Microsoft Teams is a terrible idea, the messages can land up in logs, but they are also stored in the chat history. Developers can delete the messages once copied out, although this extra admin adds friction to the process and is often forgotten.

Note: Sending the secrets in email, is less secure and adds even more admin for trying to remove some of the trace of the secret and is probably the least secure way of transferring secrets.

❌ Figure: Bad practice - Overall rating: 3/10

✅ Good practices

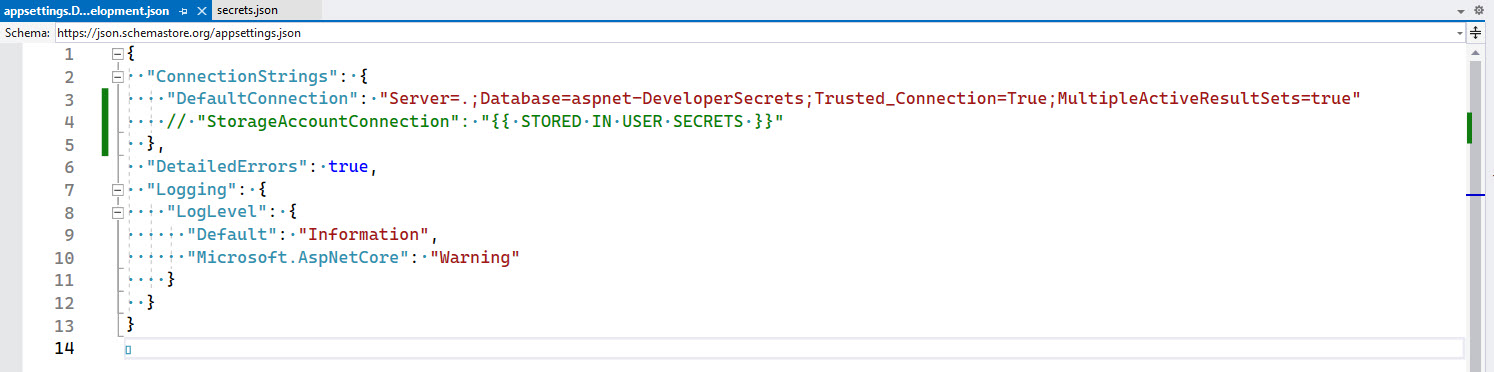

✅ Remind developers where the secrets are for a project

For development purposes once you are using .NET User Secrets you will still need to share them with other developers on the project.

<imageEmbed alt="Image" size="large" showBorder={false} figureEmbed={{ preset: "default", figure: 'User Secrets are stored outside the development folder', shouldDisplay: true }} src="/uploads/rules/share-your-developer-secrets-securely/user-secrets.jpg" />As a way of giving a heads up to other developers on the project, you can add a step in your

_docs\Instructions-Compile.mdfile (see rule on making awesome documentation) to inform developers to get a copy of the user secrets. You can also add a placeholder to theappsettings.Development.jsonfile to remind developers to add the secrets.

✅ Figure: Good practice - Remind developers where the secrets are for this project

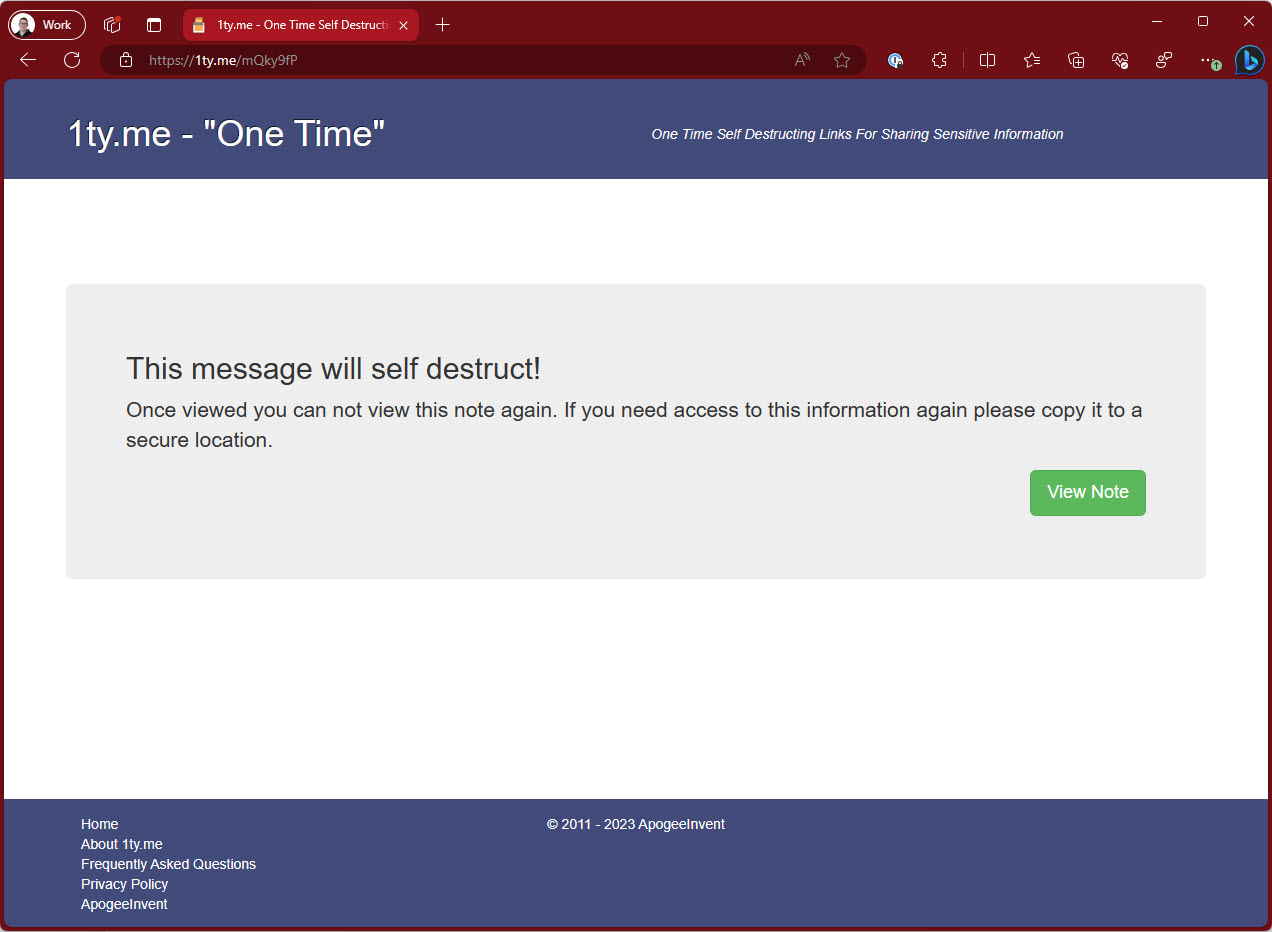

✅ Use 1ty.me to share secrets securely

Using a site like 1ty.me allows you to share secrets securely with other developers on the project.

Pros:

- Simple to share secrets

- Free

Cons:

- Requires a developer to have a copy of the

secrets.jsonfile already - Developers need to remember to add placeholders for developer specific secrets before sharing

- Access Control - Although the link is single use, there's no absolute guarantee that the person opening the link is authorized to do so

✅ Figure: Good practice - Overall rating 8/10

✅ Use Azure Key Vault

Azure Key Vault is a great way to store secrets securely. It is great for production environments, although for development purposes it means you would have to be online at all times.

Pros:

- Enterprise grade

- Uses industry standard best encryption

- Dynamically cycles secrets

- Access Control - Access granted based on Azure AD permissions - no need to 'securely' share passwords with colleagues

Cons:

- Not able to configure developer specific secrets

- No offline access

- Tightly integrated into Azure so if you are running on another provider or on premises, this may be a concern

- Authentication into Key Vault requires Azure service authentication, which isn't supported in every IDE

✅ Figure: Good practice - Overall rating 8/10

✅ (Recommended) Use Enterprise Secret Management Tool – Keeper, 1Password, LastPass, Hashicorp Vault, etc

Enterprise Secret Management tools have are great for storing secrets for various systems across the whole organization. This includes developer secrets

Pros:

- Developers don't need to call other developers to get secrets

- Placeholders can be placed in the stored secrets

- Access Control - Only developers who are authorized to access the secrets can do so

Cons:

- More complex to install and administer

- Paid Service

✅ Figure: Good practice - Overall rating 10/10

Tip: You can store the full

secrets.jsonfile contents in the enterprise secrets management tool.Most enterprise secrets management tool have the ability to retrieve the secrets via an API, with this you could also store the

UserSecretIdin a field and create a script that updates the secrets easily into the correctsecrets.jsonfile on your development machine.Do you avoid clear text email addresses in web pages?

Clear text email addresses in web pages are very dangerous because it gives spam sender a chance to pick up your email address, which produces a lot of spam/traffic to your mail server, this will cost you money and time to fix.

Never put clear text email addresses on web pages.

<!--SSW Code Auditor - Ignore next line(HTML)--><a href="mailto:test@ssw.com.au">Contact Us</a>❌ Figure: Bad - Using a plain email address that it will be crawled and made use of easily

<a href="javascript:sendEmail('74657374407373772e636f6d2e6175')" onmouseover="javascript:displayStatus('74657374407373772e636f6d2e6175');return true;" onmouseout="javascript:clearStatus(); return true;">Contact Us</a>✅ Figure: Good - Using an encoded email address

Tip: If you use Wordpress, use the Email Encoder Bundle plugin to help you encode email addresses easily.

We have a program called SSW CodeAuditor to check for this rule.

Do you always create suggestions when something is hard to do?

One of our goals is to make the job of the developer as easy as possible. If you have to write a lot of code for something that you think you should not have to do, you should make a suggestion and add it to the relevant page.

If you have to add a suggestion, make sure that you put the link to that suggestion into the comments of your code.

/// <summary>/// base class for command implementations/// This is a work around as standard MVVM commands/// are not provided by default./// </summary>public class Command : ICommand{// code}❌ Figure: Figure: Bad example - The link to the suggestion should be in the comments

/// <summary>/// base class for command implementations/// This is a work around as standard MVVM commands/// are not provided by default./// </summary>////// <remarks>/// Issue Logged here: https://github.com/SSWConsulting/SSW.Rules/issues/3///</remarks>public class Command : ICommand{// code}✅ Figure: Figure: Good example - When you link to a suggestion everyone can find it and vote it up

Do you avoid casts and use the "as operator" instead?

Use casts only if: a. You know 100% that you get that type back b. You want to perform a user-defined conversion

private void AMControlMouseLeftButtonUp(object sender, MouseButtonEventArgs e){var auc = (AMUserControl)sender;var aucSessionId = auc.myUserControl.Tag;// snip snip snip}❌ Figure: Bad example

private void AMControlMouseLeftButtonUp(object sender, MouseButtonEventArgs e){var auc = sender as AMUserControl;if (auc != null){var aucSessionId = auc.myUserControl.Tag;// snip snip snip}}✅ Figure: Good example

More info here: http://blog.gfader.com/2010/08/avoid-type-casts-use-operator-and-check.html

Do you avoid Empty code blocks?

Empty Visual C# .NET methods consume program resources unnecessarily. Put a comment in code block if its stub for future application. Don’t add empty C# methods to your code. If you are adding one as a placeholder for future development, add a comment with a TODO.

Also, to avoid unnecessary resource consumption, you should keep the entire method commented until it has been implemented.

If the class implements an inherited interface method, ensure the method throws NotImplementedException.

public class Example{public double salary(){}}❌ Figure: Figure: Bad Example - Method is empty

public class Sample{public double salary(){return 2500.00;}}✅ Figure: Figure: Good Example - Method implements some code

public interface IDemo{void DoSomethingUseful();void SomethingThatCanBeIgnored();}public class Demo : IDemo{public void DoSomethingUseful(){// no audit issuesConsole.WriteLine("Useful");}// audit issuespublic void SomethingThatCanBeIgnored(){}}❌ Figure: Figure: Bad Example - No Comment within empty code block

public interface IDemo{void DoSomethingUseful();void SomethingThatCanBeIgnored();}public class Demo : IDemo{public void DoSomethingUseful(){// no audit issuesConsole.WriteLine("Useful");}// No audit issuespublic void SomethingThatCanBeIgnored(){// stub for IDemo interface}}✅ Figure: Figure: Good Example - Added comment within Empty Code block method of interface class

Do you avoid logic errors by using Else If?

We see a lot of programmers doing this, they have two conditions - true and false - and they do not consider other possibilities - e.g. an empty string. Take a look at this example. We have an If statement that checks what backend database is being used.

In the example the only expected values are "Development" and "Production".

void Load(string environment){if (environment == "Development"){// set Dev environment variables}else{// set Production environment variables}}❌ Figure: Figure: Bad example with If statement

Consider later that extra environments may be added: e.g. "Staging"

By using the above code, the wrong code will run because the above code assumes two possible situations. To avoid this problem, change the code to be defensive .g. Use an Else If statement (like below).

Now the code will throw an exception if an unexpected value is provided.

void Load(string environment){if (environment == "Development"){// set Dev environment variables}else if (environment == "Production"){// set Production environment variables}else{throw new InvalidArgumentException(environment);}}✅ Figure: Figure: Good example with If statement

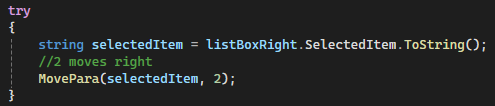

Do you avoid putting business logic into the presentation layer?

Be sure you are aware of what is business logic and what isn't. Typically, looping code will be placed in the business layer. This ensures that no redundant code is written and other projects can reference this logic as well.

private void btnOK_Click(object sender, EventArgs e){rtbParaText.Clear();var query =from p in dc.GetTable()select p.ParaID;foreach (var result in query){var query2 =from t in dc.GetTable()where t.ParaID == resultselect t.ParaText;rtbParaText.AppendText(query2.First() + "\r\n");}}❌ Figure: Bad Example: A UI method mixed with business logics

private void btnOK_Click(object sender, EventArgs e){string paraText = Business.GetParaText();rtbParaText.Clear();rtbParaText.Add(paraText);}✅ Figure: Good Example : Putting business logics into the business project, just call the relevant method when needed

Do you avoid "UI" in event names?

No "UI" in event names, the event raiser should be unaware of the UI in MVVM and user controls The handler of the event should then do something on the UI.

private void RaiseUIUpdateBidButtonsRed() {if (UIUpdateBidButtonsRed != null) {UIUpdateBidButtonsRed();}}❌ Figure: Bad example: Avoid "UI" in event names, an event is UI un-aware

private void RaiseSelectedLotUpdated() {if (SelectedLotUpdated != null) {SelectedLotUpdated();}}✅ Figure: Good example: When receiving an update on the currently selected item, change the UI correspondingly (or even better: use MVVM and data binding)

Do you know when to use switch statement instead of if-else?

The .NET framework and the C# language provide two methods for conditional handling where multiple distinct values can be selected from. The

switchstatement is less flexible than theif-else-iftree but is generally considered to be more efficient.The .NET compiler generates a jump list for switch blocks, resulting in far better performance than if/else for evaluating conditions. The performance gains are negligible when the number of conditions is trivial (i.e. fewer than 5), so if the code is clearer and more maintainable using if/else blocks, then you can use your discretion. But be prepared to refactor to a switch block if the number of conditions exceeds 5.

int DepartmentId = GetDepartmentId()if(DepartmentId == 1){// do something}else if(DepartmentId == 2){// do something #2}else if(DepartmentId == 3){// do something #3}else if(DepartmentId == 4){// do something #4}else if(DepartmentId == 5){// do something #5}else{// do something #6}❌ Figure: Figure: Bad example of coding practice

int DepartmentId = GetDepartmentId()switch(DepartmentId){case 1:// do somethingbreak;case 2:// do something # 2break;case 3:// do something # 3break;case 4:// do something # 4break;case 5:// do something # 5break;case 6:// do something # 6break;default://Do something herebreak;}✅ Figure: Figure: Good example of coding practice which will result better performance

In situation where your inputs have a very skewed distribution,

if-else-ifcould outperformswitchstatement by offering a fast path. Ordering yourifstatement with the most frequent condition first will give priority to tests upfront, whereasswitchstatement will test all cases with equal priority.Do you avoid validating XML documents unnecessarily?

Validating an XML document against a schema is expensive, and should not be done where it is not absolutely necessary. Combined with weight the XML document object, validation can cause a significant performance hit:

- Read with XmlValidatingReader: 172203 nodes - 812 ms

- Read with XmlTextReader: 172203 nodes - 320 ms

- Parse using XmlDocument no validation - length 1619608 - 1052 ms

- Parse using XmlDocument with XmlValidatingReader: length 1619608 - 1862 ms

You can disable validation when using the XmlDocument object by passing an XmlTextReader instead of the XmlValidatingTextReader:

XmlDocument report = new XmlDocument();XmlTextReader tr = new XmlTextReader(Configuration.LastReportPath);report.Load(tr);To perform validation:

XmlDocument report = new XmlDocument();XmlTextReader tr = new XmlTextReader(Configuration.LastReportPath);XmlValidatingReader reader = new XmlValidatingReader(tr);report.Load(reader);The XSD should be distributed in the same directory as the XML file and a relative path should be used:

<Report> <Report xmlns="LinkAuditorReport.xsd">... </Report>Do you change the connection timeout to 5 seconds?

By default, the connection timeout is 15 seconds. When it comes to testing if a connection is valid or not, 15 seconds is a long time for the user to wait. You should change the connection timeout inside your connection strings to 5 seconds.

"Integrated Security=SSPI;Initial Catalog=SallyKnoxMedical;Data Source=TUNA"❌ Figure: Figure: Bad Connection String

"Integrated Security=SSPI;Initial Catalog=SallyKnoxMedical;Data Source=TUNA;Connect Timeout=5"✅ Figure: Figure: Good Connection String with a 5-second connection timeout

Do you declare member accessibility for all classes?

Not explicitly specifying the access type for members of a structure or class can be misleading for other developers. The default member accessibility level for classes and structs in Visual C# .NET is always private. In Visual Basic .NET, the default for classes is private, but for structs is public.

Match MatchExpression(string input, string pattern)❌ Figure: Figure: Bad - Method without member accessibility declared

private Match MatchExpression(string input, string pattern)✅ Figure: Figure: Good - Method with member accessibility declared

Figure: Compiler warning given for not explicitly defining member access level

We have a program called SSW Code Auditor to check for this rule.

Do you do your validation with Return?

The return statement can be very useful when used for validation filtering.

Instead of a deep nested If, use Return to provide a short execution path for conditions which are invalid.

private void AssignRightToLeft(){// Validate Rightif (paraRight.SelectedIndex >= 0){// Validate Leftif (paraLeft.SelectedIndex >= 0){string paraId = paraRight.SelectedValue.ToString();Paragraph para = new Paragraph();para.MoveRight(paraId);RefreshData();}}}❌ Figure: Figure: Bad example - Using nested if for validation

private void AssignRightToLeft(){// Validate Rightif (paraRight.SelectedIndex < 0){return;}// Validate Leftif (paraLeft.SelectedIndex < 0){return;}string paraId = paraRight.SelectedValue.ToString();Paragraph para = new Paragraph();para.MoveRight(paraId);RefreshData();}✅ Figure: Figure: Good example - Using Return to exit early if invalid

Do you expose events as events?

You should expose events as events.

public Action< connectioninformation > ConnectionProblem;❌ Figure: Bad code

public event Action< connectioninformation > ConnectionProblem;✅ Figure: Good code

Do you follow the boy scout rule?

This rule is inspired by a piece from Robert C. Martin (Uncle Bob) where he identifies an age old boys scouts rule could be used by software developers to constantly improve a codebase.

Uncle Bob proposed the original rule...

Always leave the campground cleaner than you found it.

...be changed to

Always leave the code you've worked on cleaner than you found it.

The reasoning being that no matter how good of a software developer we are, over time, smells creep into code. Be it from tight deadlines, old code that has been changed or appended to in insolation 100's of times over years or just or just newer & better ways of doing things become available.

So each time you touch some code, leave it just a little cleaner than the way you found it.

Here are some simple examples of how you can leave your

campsitecode cleaner:- Remove a compiler warning

- Remove unused code

- Improve variable/method naming to make it clearer

- DRY out some code

- Restructure a code block to make it more readable

- Add a test for a missing use case

Do you follow naming conventions for your Boolean Property?

Boolean Properties must be prefixed by a verb. Verbs like "Supports", "Allow", "Accept", "Use" should be valid. Also properties like "Visible", "Available" should be accepted (maybe not). See how to name Boolean columns in SQL databases.

public bool Enable { get; set; }public bool Invoice { get; set; }❌ Figure: Bad example - Not using naming convention for Boolean Property

public bool Enabled { get; set; }public bool IsInvoiceSent { get; set; }✅ Figure: Good example - Using naming convention for Boolean Property

Naming Boolean state Variables in Frontend code

When it comes to state management in frameworks like Angular or React, a similar principle applies, but with a focus on the continuity of the action.

For instance, if you are tracking a process or a loading state, the variable should reflect the ongoing nature of these actions. Instead of "isLoaded" or "isProcessed," which suggest a completed state, use names like "isLoading" or "isProcessing."

These names start as false, change to true while the process is ongoing, and revert to false once completed.

const [isLoading, setIsLoading] = useState(false); // Initial state: not loadingNote: When an operation begins, isLoading is set to true, indicating the process is active. Upon completion, it's set back to false.

This naming convention avoids confusion, such as a variable named isLoaded that would be true before the completion of a process and then false, which is counterintuitive and misleading.

We have a program called SSW CodeAuditor to check for this rule.

Do you format "Environment.NewLine" at the end of a line?

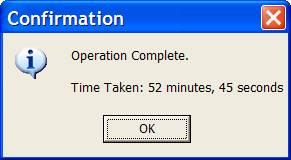

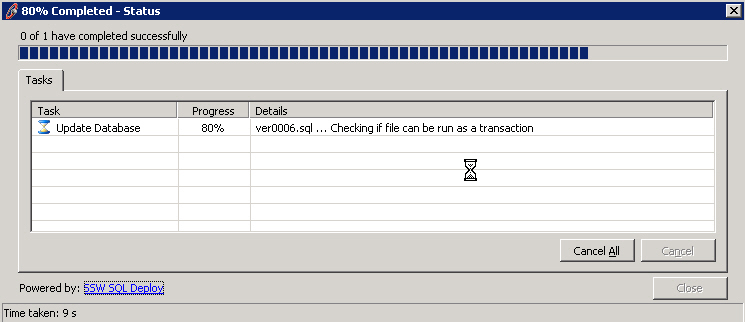

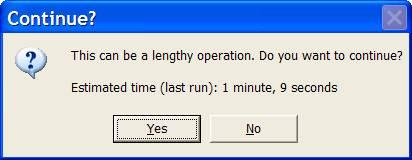

<introEmbed body={<> You should format "Environment.NewLine" at the end of a line. </>} /> ```csharp string message = "The database is not valid." + Environment.NewLine + "Do you want to upgrade it? "; ``` <figureEmbed figureEmbed={{ preset: "badExample", figure: 'Bad example - "Environment.NewLine" isn\'t at the end of the line', shouldDisplay: true } } /> ```csharp string message = "The database is not valid." + Environment.NewLine; message += "Do you want to upgrade it? "; ``` <figureEmbed figureEmbed={{ preset: "goodExample", figure: 'Good example - "Environment.NewLine" is at the end of the line', shouldDisplay: true } } /> ```csharp return string.Join(Environment.NewLine, paragraphs); ``` <figureEmbed figureEmbed={{ preset: "goodExample", figure: 'Good example - "Environment.NewLine" is an exception for String.Join\', shouldDisplay: true } } />Do you have the time taken in the status bar?

This feature is Particularly important if the user runs a semi-long task (e.g.30 seconds) once a day. Only at the end of the long process can they know the particular amount of time, if the time taken dialog is shown after the finish. If the status bar contains the time taken and the progress bar contains the progress percentage, they can evaluate how long it will take according to the time taken and percentage. Then they can switch to other work or go get a cup of coffee.

Also a developer, you can use it to know if a piece of code you have modified has increased the performance of the task or hindered it.

❌ Figure: Bad example - popup dialog at the end of a long process

✅ Figure: Good example - show time taken in the status bar

Do you import namespaces and shorten the references?

You should import namespaces and shorten the references.

System.Text.StringBuilder myStringBuilder = new System.Text.StringBuilder();❌ Figure: Figure: Bad code - Long reference to object name

using System.Text;......StringBuilder myStringBuilder = new StringBuilder();✅ Figure: Figure: Good code - Import the namespace and remove the repeated System.Text reference

If you have ReSharper installed, you can let ReSharper take care of this for you:

Figure: Right click and select "Reformat Code..."

Figure: Make sure "Shorten references" is checked and click "Reformat"

Do you initialize variables outside of the try block?

You should initialize variables outside of the try block.

Cursor cur;try {// ...cur = Cursor.Current; //Bad Code - initializing the variable inside the try blockCursor.Current = Cursors.WaitCursor;// ...} finally {Cursor.Current = cur;}❌ Figure: Bad Example: Because of the initializing code inside the try block. If it failed on this line then you will get a NullReferenceException in Finally

Cursor cur = Cursor.Current; //Good Code - initializing the variable outside the try blocktry {// ...Cursor.Current = Cursors.WaitCursor;// ...} finally {Cursor.Current = cur;}✅ Figure: Good Example : Because the initializing code is outside the try block

Do you know that Enum types should not be suffixed with the word "Enum"?

This is against the .NET Object Naming Conventions and inconsistent with the framework.

Public Enum ProjectLanguageEnum CSharp VisualBasic End Enum❌ Figure: Bad example - Enum type is suffixed with the word "Enum"

Public Enum ProjectLanguage CSharp VisualBasic End Enum✅ Figure: Good example - Enum type is not suffixed with the word "Enum"

We have a program called SSW Code Auditor to check for this rule.

Do you know how to use Connection Strings?

There are 2 type of connection strings. The first contains only address type information without authorization secrets. These can use all of the simpler methods of storing configuration as none of this data is secret.

Option 1 - Using Azure Managed Identities (Recommended)

When deploying an Azure hosted application we can use Azure Managed Identities to avoid having to include a password or key inside our connection string. This means we really just need to keep the address or url to the service in our application configuration. Because our application has a Managed Identity, this can be treated in the same way as a user's Azure AD identity and specific roles can be assigned to grant the application access to required services.

This is the preferred method wherever possible, because it eliminates the need for any secrets to be stored. The other advantage is that for many services the level of access control available using Managed Identities is much more granular making it much easier to follow the Principle of Least Privilege.

Option 2 - Connection Strings with passwords or keys

If you have to use some sort of secret or key to login to the service being referenced, then some thought needs to be given to how those secrets can be secured. Take a look at Do you store your secrets securely to learn how to keep your secrets secure.

Example - Integrating Azure Key Vault into your ASP.NET Core application

In .NET 5 we can use Azure Key Vault to securely store our connection strings away from prying eyes.

Azure Key Vault is great for keeping your secrets secret because you can control access to the vault via Access Policies. The access policies allows you to add Users and Applications with customized permissions. Make sure you enable the System assigned identity for your App Service, this is required for adding it to Key Vault via Access Policies.

You can integrate Key Vault directly into your ASP.NET Core application configuration. This allows you to access Key Vault secrets via

IConfiguration.public static IHostBuilder CreateHostBuilder(string[] args) =>Host.CreateDefaultBuilder(args).ConfigureWebHostDefaults(webBuilder =>{webBuilder.UseStartup<Startup>().ConfigureAppConfiguration((context, config) =>{// To run the "Production" app locally, modify your launchSettings.json file// -> set ASPNETCORE_ENVIRONMENT value as "Production"if (context.HostingEnvironment.IsProduction()){IConfigurationRoot builtConfig = config.Build();// ATTENTION://// If running the app from your local dev machine (not in Azure AppService),// -> use the AzureCliCredential provider.// -> This means you have to log in locally via `az login` before running the app on your local machine.//// If running the app from Azure AppService// -> use the DefaultAzureCredential provider//TokenCredential cred = context.HostingEnvironment.IsAzureAppService() ?new DefaultAzureCredential(false) : new AzureCliCredential();var keyvaultUri = new Uri($"https://{builtConfig["KeyVaultName"]}.vault.azure.net/");var secretClient = new SecretClient(keyvaultUri, cred);config.AddAzureKeyVault(secretClient, new KeyVaultSecretManager());}});});✅ Figure: Good example - For a complete example, refer to this [sample application](https://github.com/william-liebenberg/keyvault-example)

Tip: You can detect if your application is running on your local machine or on an Azure AppService by looking for the

WEBSITE_SITE_NAMEenvironment variable. If null or empty, then you are NOT running on an Azure AppService.public static class IWebHostEnvironmentExtensions{public static bool IsAzureAppService(this IWebHostEnvironment env){var websiteName = Environment.GetEnvironmentVariable("WEBSITE_SITE_NAME");return string.IsNullOrEmpty(websiteName) is not true;}}Setting up your Key Vault correctly

In order to access the secrets in Key Vault, you (as User) or an Application must have been granted permission via a Key Vault Access Policy.

Applications require at least the LIST and GET permissions, otherwise the Key Vault integration will fail to retrieve secrets.

Figure: Key Vault Access Policies - Setting permissions for Applications and/or Users

Azure Key Vault and App Services can easily trust each other by making use of System assigned Managed Identities. Azure takes care of all the complicated logic behind the scenes for these two services to communicate with each other - reducing the complexity for application developers.

So, make sure that your Azure App Service has the System assigned identity enabled.

Once enabled, you can create a Key Vault Access policy to give your App Service permission to retrieve secrets from the Key Vault.

Figure: Enabling the System assigned identity for your App Service - this is required for adding it to Key Vault via Access Policies

Adding secrets into Key Vault is easy.

- Create a new secret by clicking on the Generate/Import button

- Provide the name

- Provide the secret value

- Click Create

Figure: Creating the SqlConnectionString secret in Key Vault.

Figure: SqlConnectionString stored in Key Vault

Note: The ApplicationSecrets section is indicated by "ApplicationSecrets--" instead of "ApplicationSecrets:".

As a result of storing secrets in Key Vault, your Azure App Service configuration (app settings) will be nice and clean. You should not see any fields that contain passwords or keys. Only basic configuration values.

Figure: Your WebApp Configuration - No passwords or secrets, just a name of the Key vault that it needs to access

Video: Watch SSW's William Liebenberg explain Connection Strings and Key Vault in more detail (8 min)History of Connection Strings

In .NET 1.1 we used to store our connection string in a configuration file like this:

<configuration><appSettings><add key="ConnectionString" value ="integrated security=true;data source=(local);initial catalog=Northwind"/></appSettings></configuration>...and access this connection string in code like this:

SqlConnection sqlConn =new SqlConnection(System.Configuration.ConfigurationSettings.AppSettings["ConnectionString"]);❌ Figure: Historical example - Old ASP.NET 1.1 way, untyped and prone to error

In .NET 2.0 we used strongly typed settings classes:

Step 1: Setup your settings in your common project. E.g. Northwind.Common

Figure: Settings in Project Properties

Step 2: Open up the generated App.config under your common project. E.g. Northwind.Common/App.config

Step 3:

Copy the content into your entry applications app.config. E.g. Northwind.WindowsUI/App.configThe new setting has been updated to app.config automatically in .NET 2.0<configuration><connectionStrings><add name="Common.Properties.Settings.NorthwindConnectionString"connectionString="Data Source=(local);Initial Catalog=Northwind;Integrated Security=True"providerName="System.Data.SqlClient" /></connectionStrings></configuration>...then you can access the connection string like this in C#:

SqlConnection sqlConn =new SqlConnection(Common.Properties.Settings.Default.NorthwindConnectionString);❌ Figure: Historical example - Access our connection string by strongly typed generated settings class...this is no longer the best way to do it

Do you know what to do with a work around?

<introEmbed body={<> If you have to use a workaround you should always comment your code. In your code add comments with: </>} /> 1. The pain - In the code add a URL to the existing resource you are following e.g. a blog post 2. The potential solution - Search for a suggestion on the product website. If there isn't one, create a suggestion to the product team that points to the resource. e.g. on <https://uservoice.com/> or <https://bettersoftwaresuggestions.com/> <asideEmbed variant="greybox" body={<> "This is a workaround as per the suggestion \[URL]" </>} figureEmbed={{ preset: "default", figure: 'Always add a URL to the suggestion that you are compensating for', shouldDisplay: true }} /> ### Exercise: Understand commenting You have just added a grid that auto updates, but you need to disable all the timers when you click the edit button. You have found an article on Code Project (<http://www.codeproject.com/Articles/39194/Disable-a-timer-at-every-level-of-your-ASP-NET-con.aspx>) and you have added the work around. Now what do you do? ```cs protected override void OnPreLoad(EventArgs e) { //Fix for pages that allow edit in grids this.Controls.ForEach(c => { if (c is System.Web.UI.Timer) { c.Enabled = false; } }); base.OnPreLoad(e); } ``` **Figure: Work around code in the Page Render looks good. The code is done, something is missing**Do you know when to use named parameters?

Named parameters have always been there for VB developers and in C# 4.0, C# developers finally get this feature.

You should use named parameters under these scenarios:

- When there are 4 or more parameters

- When you make use of optional parameters

- If it makes more sense to order the parameters a certain way

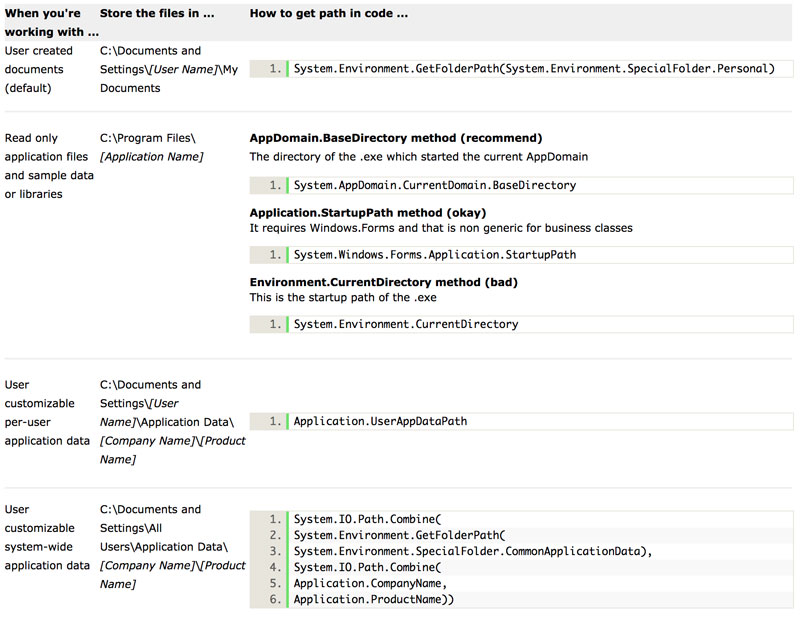

Do you know where to store your application's files?

Although many have differing opinions on this matter, Windows applications have standard storage locations for their files, whether they're settings or user data. Some will disagree with those standards, but it's safe to say that following it regardless will give users a more consistent and straightforward computing experience.

The following grid shows where application files should be placed:

Further Information

- The System.Environment class provides the most general way of retrieving those paths

- The Application class lives in the System.Windows.Form namespace, which indicates it should only be used for WinForm applications. Other types of applications such as Console and WebForm applications use their corresponding utility classes

Microsoft's write-up on this subject can be found at Microsoft API and reference catalog.

Do you name your events properly?

Events should end in "ing" or "ed".

public event Action< connectioninformation > ConnectionProblem;❌ Figure: Bad example

public event Action< connectioninformation > ConnectionProblemDetected;✅ Figure: Good example

Do you pre-format your time strings before using TimeSpan.Parse()?

TimeSpan.Parse() constructs a Timespan from a time indicated by a specified string. The acceptable parameters for this function are in the format "d.hh:mm" where "d" is the number of days (it is optional), "hh" is hours and is between 0 and 23 and "mm" is minutes and is between 0 and 59. If you try to pass, as a parameter, as a string such as "45:30" (meaning 45 hours and 30 minutes), TimeSpan.Parse() function will crash. (The exact exception received is: "System.OverflowException: TimeSpan overflowed because duration is too long".) Therefore it is recommended that you should always pre-parse the time string before passing it to the "TimeSpan.Parse()" function.

This pre-parsing is done by the FormatTimeSpanString( ) function. This function will format the input string correctly. Therefore, a time string of value "45:30" will be converted to "1.21:30" (meaning 1 day, 21 hours and 30 minutes). This format is perfectly acceptable for TimeSpan.Parse() function and it will not crash.

ts = TimeSpan.Parse(cboMyComboBox.Text)❌ Figure: Figure: Bad example - A value greater than 24hours will crash eg. 45:30

ts = TimeSpan.Parse(FormatTimeSpanString(cboMyComboBox.Text))✅ Figure: Figure: Good example - Using a wrapper method to pre-parse the string containing the TimeSpan value.

We have a program called SSW Code Auditor to check for this rule.

Do you know not to put Exit Sub before End Sub? (VB)

Do not put "Exit Sub" statements before the "End Sub". The function will end on "End Sub". "Exit Sub" is serving no real purpose here.

Private Sub SomeSubroutine()'Your code here....Exit Sub ' Bad code - Writing Exit Sub before End Sub.End Sub❌ Figure: Bad example

Private Sub SomeOtherSubroutine()'Your code here....End Sub✅ Figure: Good example

We have a program called SSW Code Auditor to check for this rule.

Do you put optional parameters at the end?

Optional parameters should be placed at the end of the method signature as optional ones tend to be less important. You should put the important parameters first.

public void SaveUserProfile([Optional] string username,[Optional] string password,string firstName,string lastName,[Optional] DateTime? birthDate) {}❌ Figure: Figure: Bad example - Username and Password are optional and first - they are less important than firstName and lastName and should be put at the end

public void SaveUserProfile(string firstName,string lastName,[Optional] string username,[Optional] string password,[Optional] DateTime? birthDate) {}✅ Figure: Figure: Good example - All the optional parameters are the end

Note: When using optional parameters, please be sure to use named para meters

Do you refer to form controls directly?

When programming in form based environments one thing to remember is not to refer to form controls directly. The correct way is to pass the controls values that you need through parameters.

There are a number of benefits for doing this:

- Debugging is simpler because all your parameters are in one place

- If for some reason you need to change the control's name then you only have to change it in one place

- The fact that nothing in your function is dependant on outside controls means you could very easily reuse your code in other areas without too many problems re-connecting the parameters being passed in

It's a correct method of programming.

Private Sub Command0_Click()CreateScheduleEnd SubSub CreateSchedule()Dim dteDateStart As DateDim dteDateEnd As DatedteDateStart = Format(Me.ctlDateStart,"dd/mm/yyyy") 'Bad Code - refering the form control directlydteDateEnd = Format(Me.ctlDateEnd, "dd/mm/yyyy").....processing codeEnd Sub❌ Figure: Bad example

Private Sub Command0_Click()CreateSchedule(ctlDateStart, ctlDateEnd)End SubSub CreateSchedule(pdteDateStart As Date, pdteDateEnd As Date)Dim dteDateStart As DateDim dteDateEnd As DatedteDateStart = Format(pdteDateStart, "dd/mm/yyyy") 'Good Code - refering the parameter directlydteDateEnd = Format(pdteDateEnd, "dd/mm/yyyy")&....processing codeEnd Sub✅ Figure: Good example

Do you know how to format your MessageBox code?

You should always write each parameter of MessageBox in a separate line. So it will be more clear to read in the code. Format your message text in code as you want to see on the screen.

Private Sub ShowMyMessage()MessageBox.Show("Areyou sure you want to delete the team project """ + strProjectName+ """?" + Environment.NewLine + Environment.NewLine + "Warning:Deleting a team project cannot be undone.", strProductName + "" + strVersion(), MessageBoxButtons.YesNo, MessageBoxIcon.Warning, MessageBoxDefaultButton.Button2)❌ Figure: Figure: Bad example of MessageBox code format

Private Sub ShowMyMessage()MessageBox.Show( _"Are you sure you want to delete the team project """ + strProjectName + """?"_ + Environment.NewLine _ +Environment.NewLine _ +"Warning: Deleting a team project cannot be undone.", _strProductName + " " + strVersion(), _MessageBoxButtons.YesNo, _MessageBoxIcon.Warning, _MessageBoxDefaultButton.Button2)End Sub✅ Figure: Figure: Good example of MessageBox code format

Do you reference websites when you implement something you found on Google?

If you end up using someone else's code, or even idea, that you found online, make sure you add a reference to this in your source code. There is a good chance that you or your team will revisit the website. And of course, it never hurts to tip your hat, to thank other coders.

private void HideToSystemTray(){// Hide the windows form in the system trayif (FormWindowState.Minimized == WindowState){Hide();}}❌ Figure: Bad example - The website where the solution was found IS NOT referenced in the comments

private void HideToSystemTray(){// Hide the windows form in the system tray// I found this solution at http://www.developer.com/net/csharp/article.php/3336751if (FormWindowState.Minimized == WindowState){Hide();}}✅ Figure: Good example - The website where the solution was found is referenced in the comments

Do you store Application-Level Settings in your database rather than configuration files when possible?

For database applications, it is best to keep application-level values (apart from connection strings) from this in the database rather than in the web.config. There are some merits as following:

- It can be updated easily with normal SQL e.g. Rolling over the current setting to a new value.

- It can be used in joins and in other queries easily without the need to pass in parameters.

- It can be used to update settings and affect the other applications based on the same database.

Do you suffix unit test classes with "Tests"?

Unit test classes should be suffixed with the word "Tests" for better coding readability.

[TestFixture] public class SqlValidatorReportTest { }❌ Figure: Bad example - Unit test class is not suffixed with "Tests"

[TestFixture] public class HtmlDocumentTests { }✅ Figure: Good example - Unit test class is suffixed with "Tests"

We have a program called SSW Code Auditor to check for this rule.

Do you use a helper extension method to raise events?

Enter Intro Text

Instead of:

private void RaiseUpdateOnExistingLotReceived() {if (ExistingLotUpdated != null) {ExistingLotUpdated();}}...use this event extension method:

public static void Raise<t>(this EventHandler<t> @event,object sender,T args) where T : EventArgs {var temp = @event;if (temp != null) {temp(sender, args);}}public static void Raise(this Action @event) {var temp = @event;if (temp != null) {temp();}}That means that instead of calling:

RaiseExistingLotUpdated();...you can do:

ExistingLotUpdated.Raise();Less code = less code to maintain = less code to be blamed for ;)

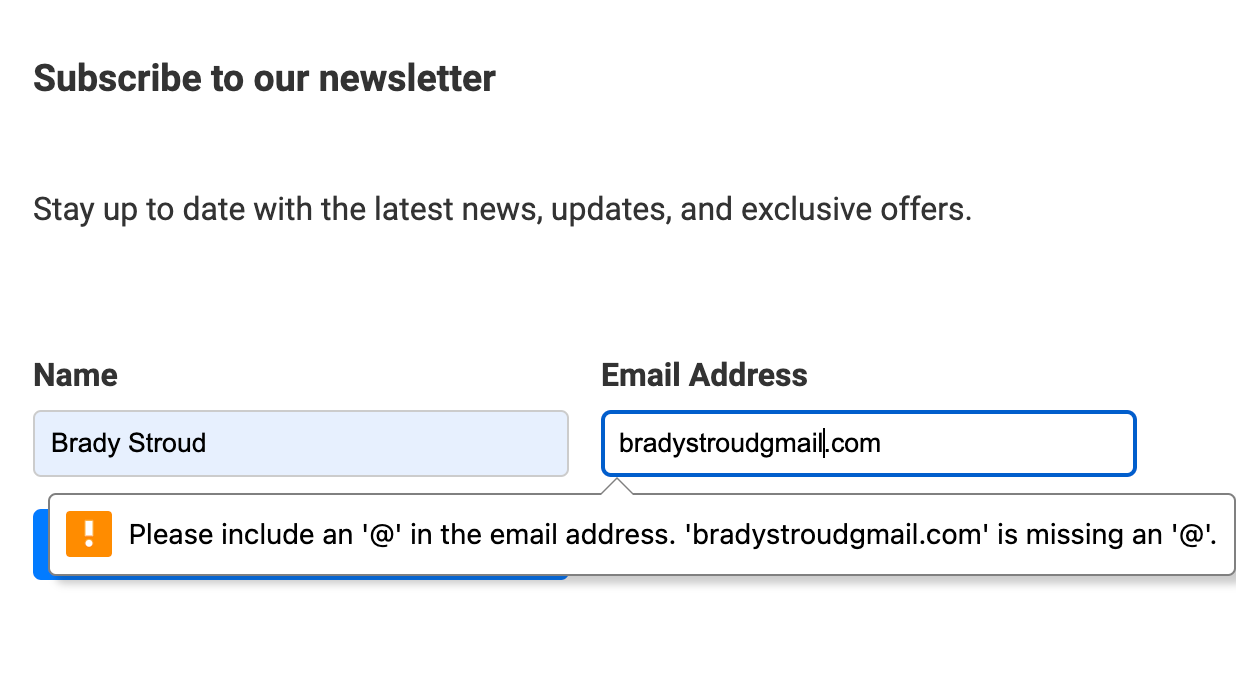

Do you use a regular expression to validate an email address?

A regex is the best way to verify an email address.

public bool IsValidEmail(string email){// Return true if it is in valid email format.if (email.IndexOf("@") <= 0) return false;if (email.EndWith("@")) return false;if (email.IndexOf(".") <= 0) return false;if ( ...}❌ Figure: Figure: Bad example of verify email address

public bool IsValidEmail(string email){// Return true if it is in valid email format.return System.Text.RegularExpressions.Regex.IsMatch( email,@"^([\w-\.]+)@(([[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.)|(([\w-]+\.)+))([a-zA-Z]{2,4}|[0-9]{1,3})(\]?)$";}✅ Figure: Figure: Good example of verify email address

Do you use a regular expression to validate an URL?

A regex is the best way to verify an URI.

public bool IsValidUri(string uri){try{Uri testUri = new Uri(uri);return true;}catch (UriFormatException ex){return false;}}❌ Figure: Figure: Bad example of verifying URI

public bool IsValidUri(string uri){// Return true if it is in valid Uri format.return System.Text.RegularExpressions.Regex.IsMatch( uri,@"^(http|ftp|https)://([^\/][\w-/:]+\.?)+([\w- ./?/:/;/\%&=]+)?(/[\w- ./?/:/;/\%&=]*)?");}✅ Figure: Figure: Good example of verifying URI

You should have unit tests for it, see our Rules to Better Unit Tests for more information.

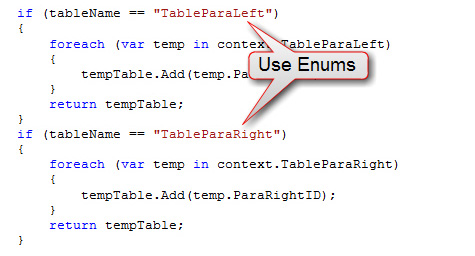

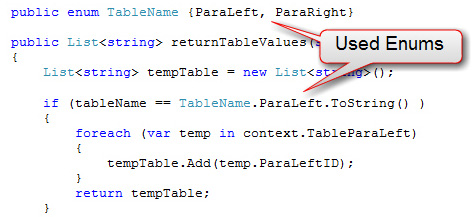

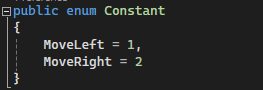

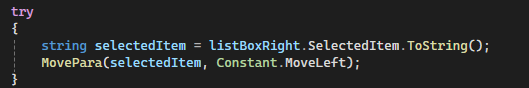

Do you use Enums instead of hard coded strings?

Use Enums instead of hard-coded strings, it makes your code lot cleaner and is really easy to manage .

❌ Figure: Bad example - "Hard- coded string" works, but is a bad idea

✅ Figure: Good example - Used Enums, looks good and is easy to manage

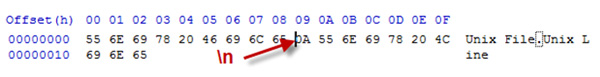

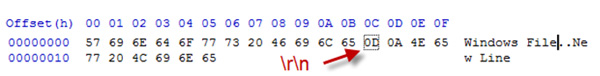

Do you use Environment.NewLine to make a new line in your string?

When you need to create a new line in your string, make sure you use Environment.NewLine, and then literally begin typing your code on a new line for readability purposes.

string strExample = "This is a very long string that is \r\n not properly implementing a new line.";❌ Figure: Bad example - The string has implemented a manual carriage return line feed pair ` `

string strExample = "This is a very long string that is " + Environment.NewLine +" properly implementing a new line.";✅ Figure: OK example - The new line is created with Enviroment.NewLine (but strings are immutable)

var example = new StringBuilder();example.AppendLine("This is a very long string that is ");example.Append(" properly implementing a new line.");✅ Figure: Good example - The new line is created by the StringBuilder and has better memory utilisation

Do you use good code over backward compatibility?

Supporting old operating systems and old versions means you have more (and often messy) code, with lots of if or switch statements. This might be OK for you because you wrote the code, but down the track when someone else is maintaining it, then there is more time/expense needed.

When you realize there is a better way to do something, then you will change it, clean code should be the goal, however, because this affects old users, and changing interfaces at every whim also means an expense for all the apps that break, the decision isn't so easy to make.

Our views on backward compatibility start with asking these questions:

- Question 1: How many apps are we going to break externally?

- Question 2: How many apps are we going to break internally?

- Question 3: What is the cost of providing backward compatibility and repairing (and test) all the broken apps?

Let's look at an example:

If we change the URL of this public Web Service, we'd have to answer the questions as follows:

- Answer 1: Externally - Don't know, we have some leads: We can look at web stats and get an idea. If an IP address enters our website at this point, it tells us that possibly an application is using it and the user isn't just following the links.

- Answer 2: Website samples + Adams code demo

- Answer 3: Can add a redirect or change the page to output a warning Old URL. Please see www.ssw.com.au/ PostCodeWebService for new URL

Because we know that not many external clients use this example, we decide to remove the old web service after some time.

Just to be friendly, we would send an email for the first month, and then another email in the second month. After that, just emit "This is deprecated (old)." We'll also need to update the UDDI so people don't keep coming to our old address.

We probably all prefer working on new features, rather than supporting old code, but it’s still a core part of the job. If your answer to question 3 scares you, it might be time to consider a backward compatibility warning.

From:JohnCc:SSWAllBcc:ZZZSubject:Changing LookOut settingsHi All,

The stored procedure procLookOutClientSelect (currently used only by LookOut any version prior to 10) is being renamed to procSSWLookOutClientIDSelect. The old stored procedure will be removed within 1 month.

You can change your settings either by:

- Going to LookOut Options -> Database tab and select the new stored procedure

- Upgrading to SSW LookOut version 10.0 which will be released later today

✅ Figure: Good Example - Email as a backward compatibility warning

Do you use Public/Protected Properties instead of Public/Protected Fields?

Public/Protected properties have a number of advantages over public/protected fields:

- Data validation Data validation can be performed in the get/set accessors of a public property. This is especially important when working with the Visual Studio .NET Designer.

- Increased flexibility Properties conceal the data storage mechanism from the user, resulting in less broken code when the class is upgraded. Properties are a recommended object-oriented practice for this reason.

- Compatibility with data binding You can only bind to a public property, not a field.

- Minimal performance overhead The performance overhead for public properties is trivial. In some situations, public fields can actually have inferior performance to public properties.

public int Count;❌ Figure: Figure: Bad code - Variable declared as a Field

public int Count{get{return _count;}set{_count = value;}}✅ Figure: Figure: Good code - Variable declared as a Property

We agree that the syntax is tedious and think Microsoft should improve this.

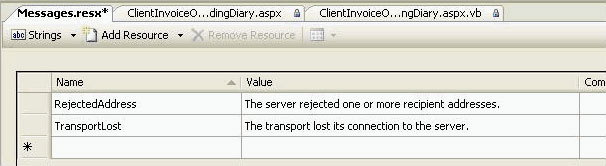

Do you use resource file to store all the messages and globlal strings?

Storing all the messages and global strings in one place will make it easy to manage them and to keep the applications in the same style.

Catch(SqlNullValueException sqlex){Response.Write("The value cannot be null.");}

Catch(SqlNullValueException sqlex){Response.Write("The value cannot be null.");}❌ Figure: Bad example - If you want to change the message, it will cost you lots of time to investigate every try-catch block

Catch(SqlNullValueException sqlex){Response.Write(GetGlobalResourceObject("Messages", "SqlValueNotNull"));}😐 Figure: OK example - Better than the hard code, but still wordy

Catch(SqlNullValueException sqlex){Response.Write(Resources.Messages.SqlValueNotNull); 'Good Code - storing message in resource file.}✅ Figure: Good example

Do you use resource file to store messages?

All messages are stored in one central place so it's easy to reuse. Furthermore, it is strongly typed - easy to type with IntelliSense in Visual Studio.

Module Startup Dim HelloWorld As String = "Hello World!" Sub Main() Console.Write(HelloWorld)Console.Read() End Sub End Module❌ Figure: Bad example of a constant message

::: good\

Figure: Saving constant message in Resource

:::